AVL Trees

Learn via video course

Overview

AVL (Adelson-Velsky and Landis) Tree is a self-balancing binary search tree that can perform certain operations in logarithmic time. It exhibits height-balancing property by associating each node of the tree with a balance factor and making sure that it stays between -1 and 1 by performing certain tree rotations.

This property prevents the Binary Search Tree from getting skewed, thereby achieving a minimal height tree that provides logarithmic time complexity for some major operations such as searching.

Takeaways

The height of the AVL tree is always balanced. The height never grows beyond log N, where N is the total number of nodes in the tree.

Introduction

In our fast-paced daily lives, we make use of a lot of different data structures and algorithms without even noticing them. For example, consider a scenario that you wish to call someone from your contact list that contains a ton of data.

For this, you need to find the phone number of that individual by using a searching process. This is internally implemented using specific data structures and uses particular algorithms to provide you with the best results in an efficient manner.

This is required as the faster the search, the more convenience you get, and the faster you can connect with other people.

With time, this searching process was gradually improved by implementing and developing new data structures that eradicate or reduce the limitations of the previously used methods. One such data structure is AVL Trees. It was developed to reduce the limitations of the searching process implemented using a non-linear data structure known as Binary Search Trees.

Now, you may ask what exactly was the limitation and how AVL Trees overcome it, providing us with a better searching process in terms of efficiency. For this, let’s take a look at the Binary Search Trees (BSTs) search process, what are the limitations in this process and how the AVL Trees overcome them. Refer this article to revise searching using Binary Search Trees

What is an AVL Tree?

AVL Tree, named after its inventors Adelson-Velsky and Landis, is a self-balancing variation of the Binary Search Tree. It automatically adjusts its structure to maintain the minimum possible height after any operation. This is achieved by assigning a balance factor to each node, which is extra information used to determine the tree's balance.

The Criterion of height balancing is a principle that determines whether a Binary Search Tree is unbalanced (skewed) or not. It states that:

Tip: A Binary Search Tree is considered to be balanced if any two sibling subtrees present in the tree don’t differ in height by more than one level i.e., the difference between the height of the left subtree and the height of the right subtree for all the nodes of the tree should not exceed unity. If it exceeds unity, then the tree is known as an unbalanced tree.

Since skewed or unbalanced BSTs provide inefficient search operations, AVL Trees prevent unbalancing by defining what we call a balance factor for each node. Let's look at what exactly is this balance factor.

Highlights:

- AVL Trees were developed to achieve logarithmic time complexity in BSTs irrespective of the order in which the elements were inserted.

- AVL Tree implemented a Balancing Criteria (For all nodes, the subtrees’ height difference should be at most 1) to overcome the limitations of BST.

- It maintains its height by performing rotations whenever the balance factor of a node violates the Balancing Criteria. As a result, it has self-balancing properties.

- It exists as a balanced BST at all times, providing logarithmic time complexity for operations such as searching.

Balance Factor

The balance factor in AVL Trees is an additional value associated with each node of the tree that represents the height difference between the left and the right sub-trees of a given node. The balance factor of a given node can be represented as:

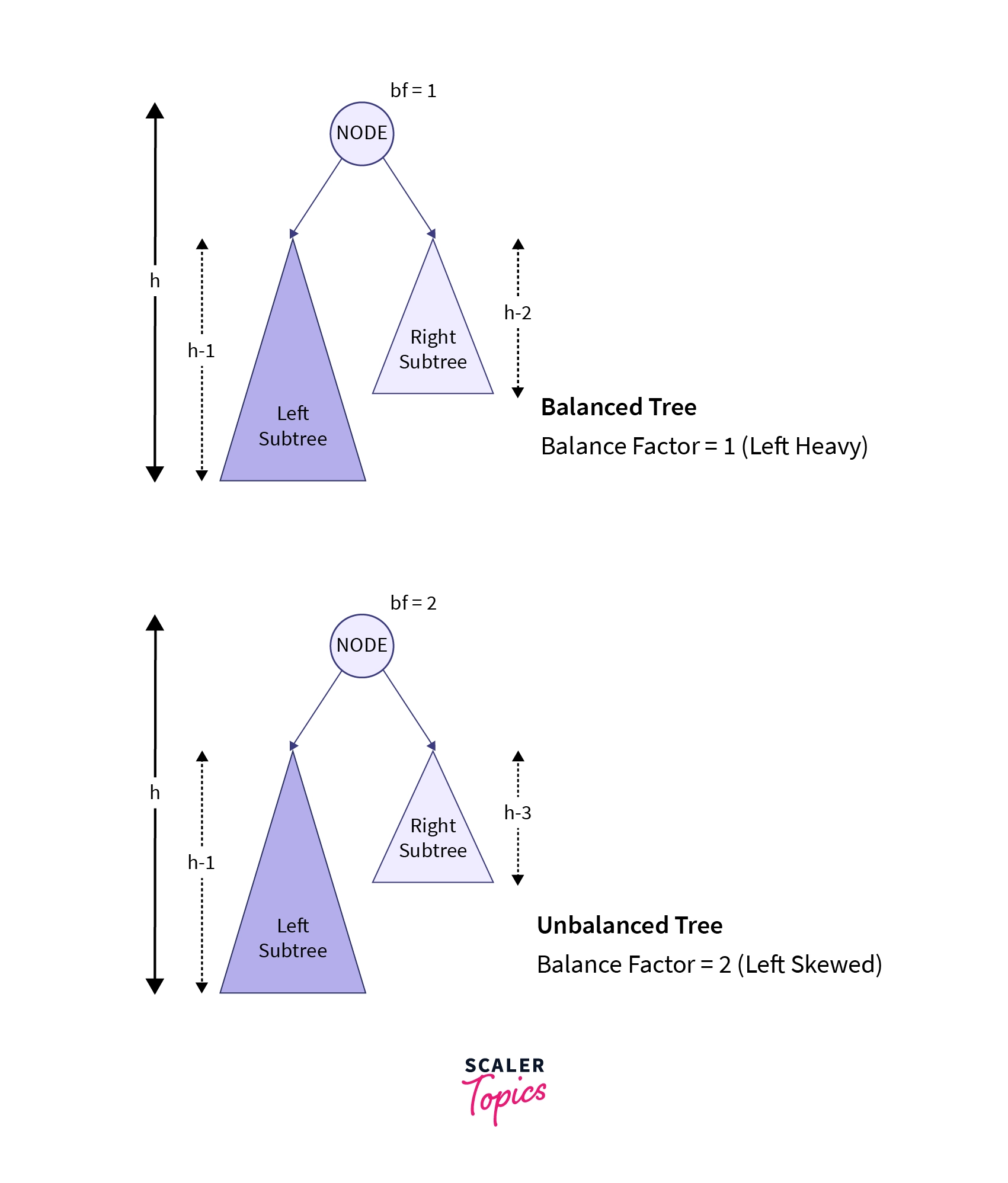

balance_factor = (Height of Left sub-tree) - (Height of right sub-tree)

Or mathematically speaking,

where bf is the balance factor of a given node in the tree, hl represents the height of the left subtree, and hr represents the height of the right subtree.

In the balanced tree example of the above illustration, we can observe that the height of the left subtree (h-1) is one greater than the height of the right subtree (h-2) of the highlighted node i.e., the given node is left-heavy having the balance factor of positive unity.

Since the balance factor of the node follows the Balancing Criteria (height difference should be at most unity), the given tree example is considered as a balanced tree.

Now, in the unbalanced tree example, we can observe that the tree is left-skewed i.e., the height of the left subtree is much greater than that on the right subtree. This is clearly an unbalanced tree as it is highly skewed. This is also indicated by the balance factor of the node as it doesn’t follow the Balancing Criteria.

Hence, AVL Trees make use of the balance factor to check whether a given node is left-heavy (height of left sub-tree is one greater than that of right sub-tree), balanced, or right-heavy (height of right sub-tree is one greater than that of left sub-tree).

Hence, using the balance factor, we can find an unbalanced node in the tree and can locate where the height-affecting operation was performed that caused the imbalance of the tree.

NOTE: Since the leaf nodes don't contain any subtrees, the balance factor for all the leaf nodes present in the Binary Search Tree is equal to 0.

Upon the execution of any height-affecting operation on the tree, if the magnitude of the balance factor of a given node exceeds unity, the specified node is said to be unbalanced as per the Balancing Criteria. This condition can be mathematically represented with the help of the given equation:

Or

Here, the above equation indicates that the balance factor of any given node can only take the value of -1, 0, and 1 for a height-balanced Binary Search Tree. To maintain this criterion for all the nodes, AVL Trees take use of certain Tree Rotations that are discussed later in this article.

Highlights:

- Balance Factor represents the height difference between the left and the right sub-trees for a given node.

- For leaf nodes, the balance factor is 0.

- AVL balance criteria: |bf| ≤ 1 for all nodes.

- Balance factor indicates whether a node is left heavy, right heavy, or balanced.

AVL Tree Rotation

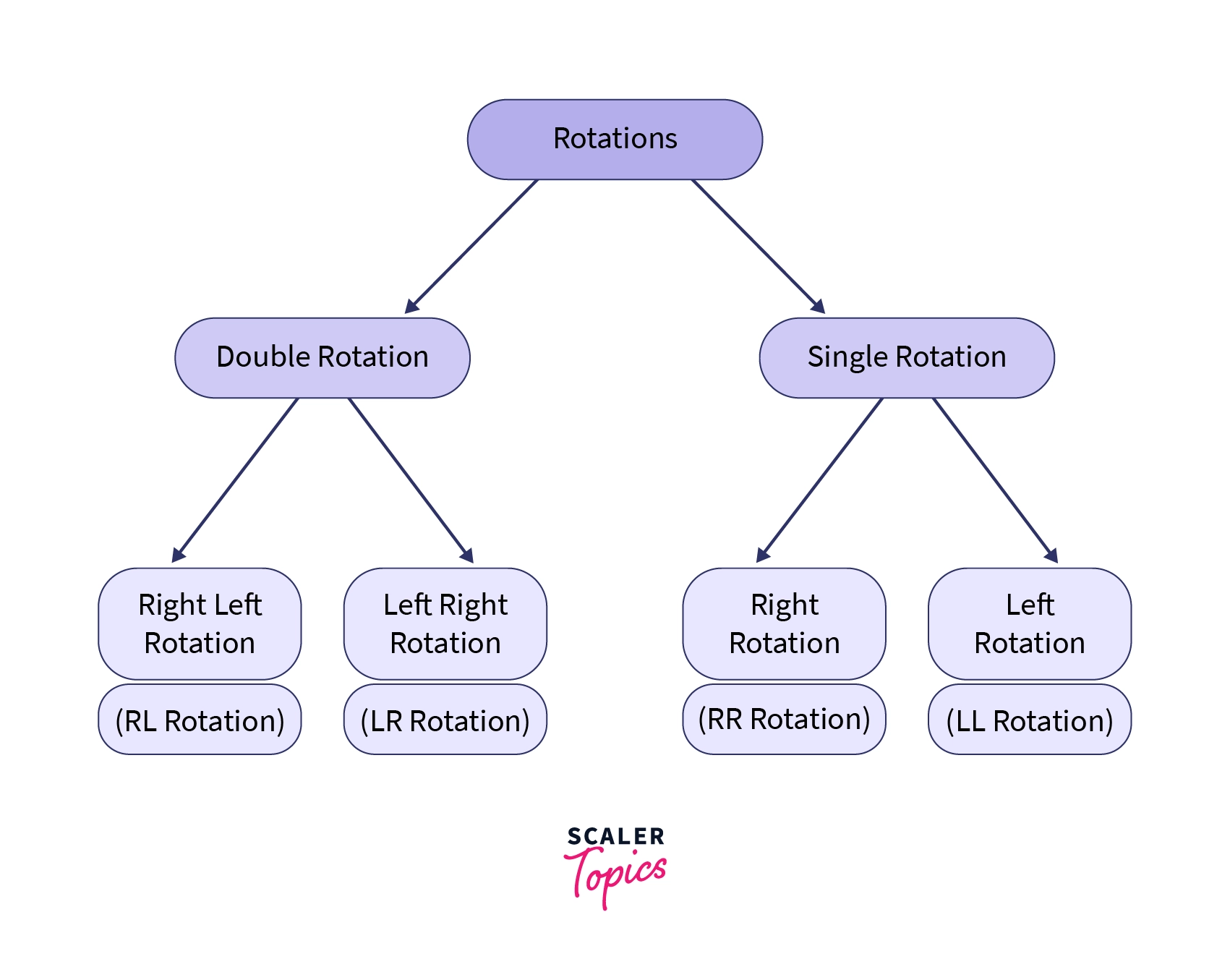

AVL Trees use the balance factor to determine whether a node is left-heavy, balanced, or right-heavy. When a node becomes unbalanced, specific tree rotations are performed to restore balance. These rotations involve rearranging the tree structure without changing the order of elements. There are four types of rotations used, each targeting a specific node imbalance caused by changes in the balance factor.

These rotations include:

Now, let's look at all the tree rotations and understand how they can be used to balance the tree and make it follow the AVL balance criterion.

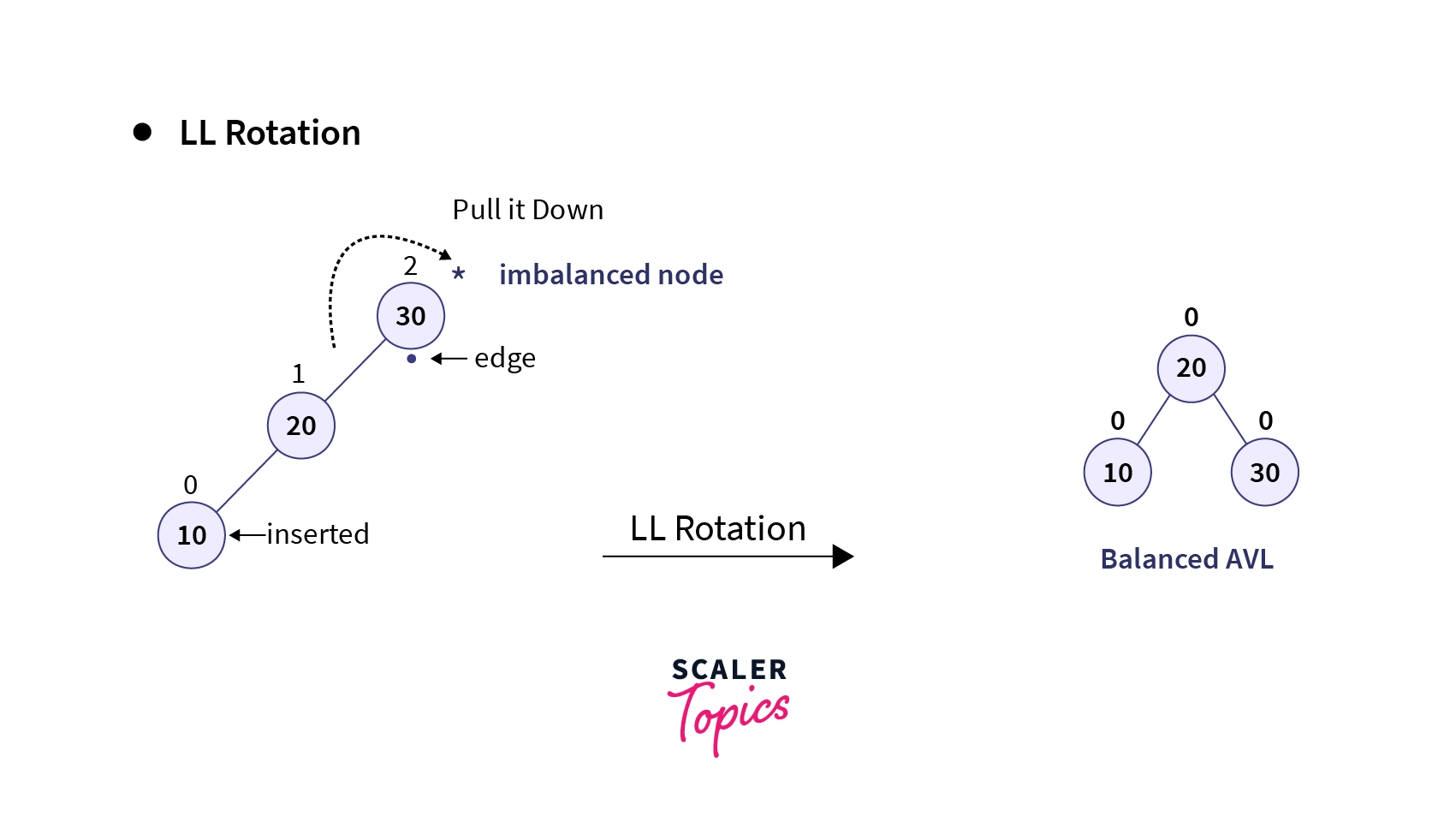

1. LL Rotation

It is a type of single rotation that is performed when the tree gets unbalanced, upon insertion of a node into the left subtree of the left child of the imbalance node i.e., upon Left-Left (LL) insertion. This imbalance indicates that the tree is heavy on the left side. Hence, a right rotation (or clockwise rotation) is applied such that this left heaviness imbalance is countered and the tree becomes a balanced tree. Let’s understand this process using an example:

Consider, a case when we wish to create a BST using elements 30, 20, and 10. Now, since these elements are given in a sorted order, the BST so formed is a left-skewed tree as shown below:

After calculating the balance factor of all nodes in the tree, it is evident that the insertion of element 10 has caused an imbalance in the root node, resulting in a left-skewed tree. This scenario represents a case of L-L insertion. To counteract the left skewness, a right rotation (clockwise rotation) is performed on the imbalanced node. This rotation shifts the heavier left subtree to the right side, restoring balance to the tree structure.

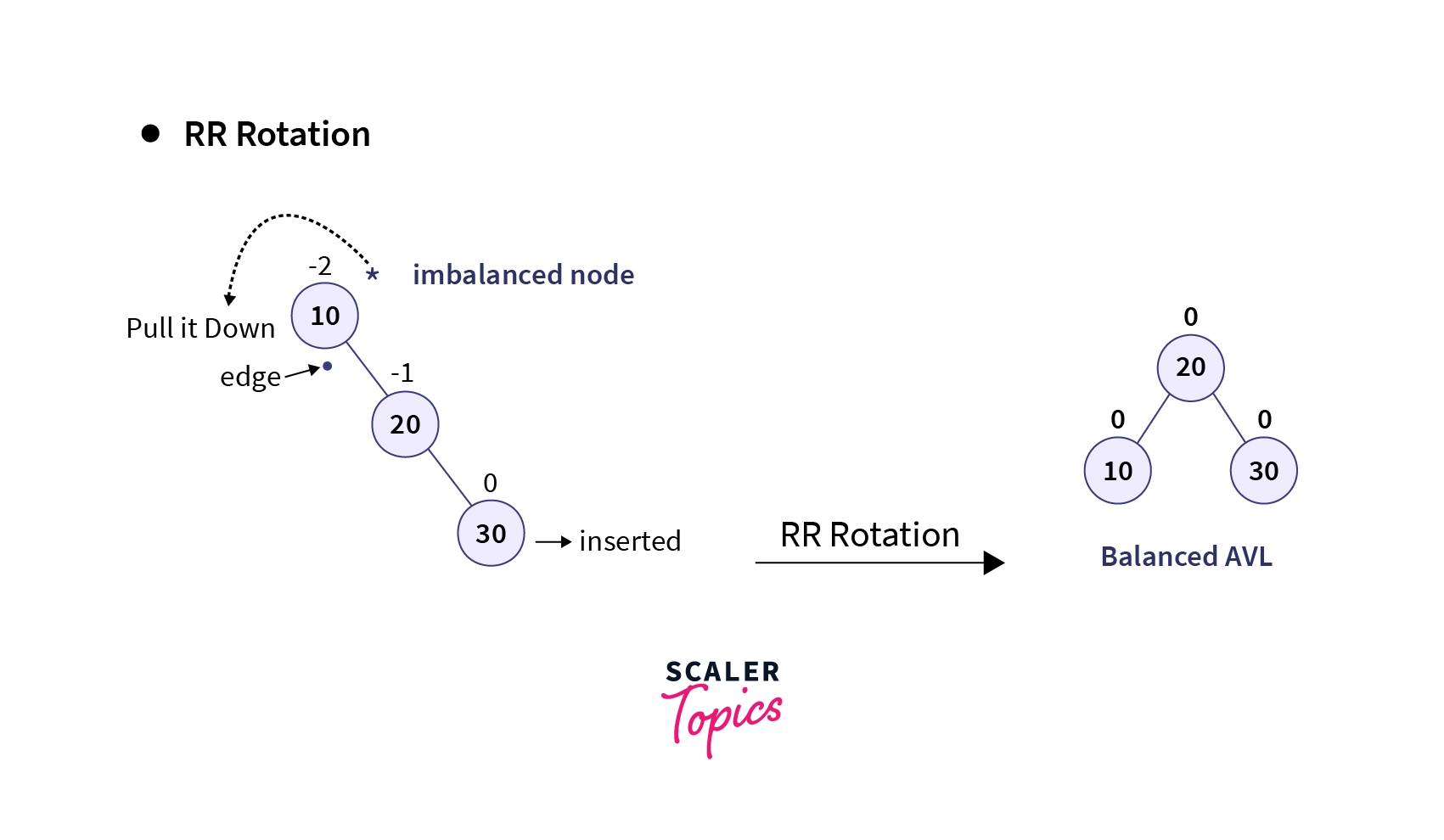

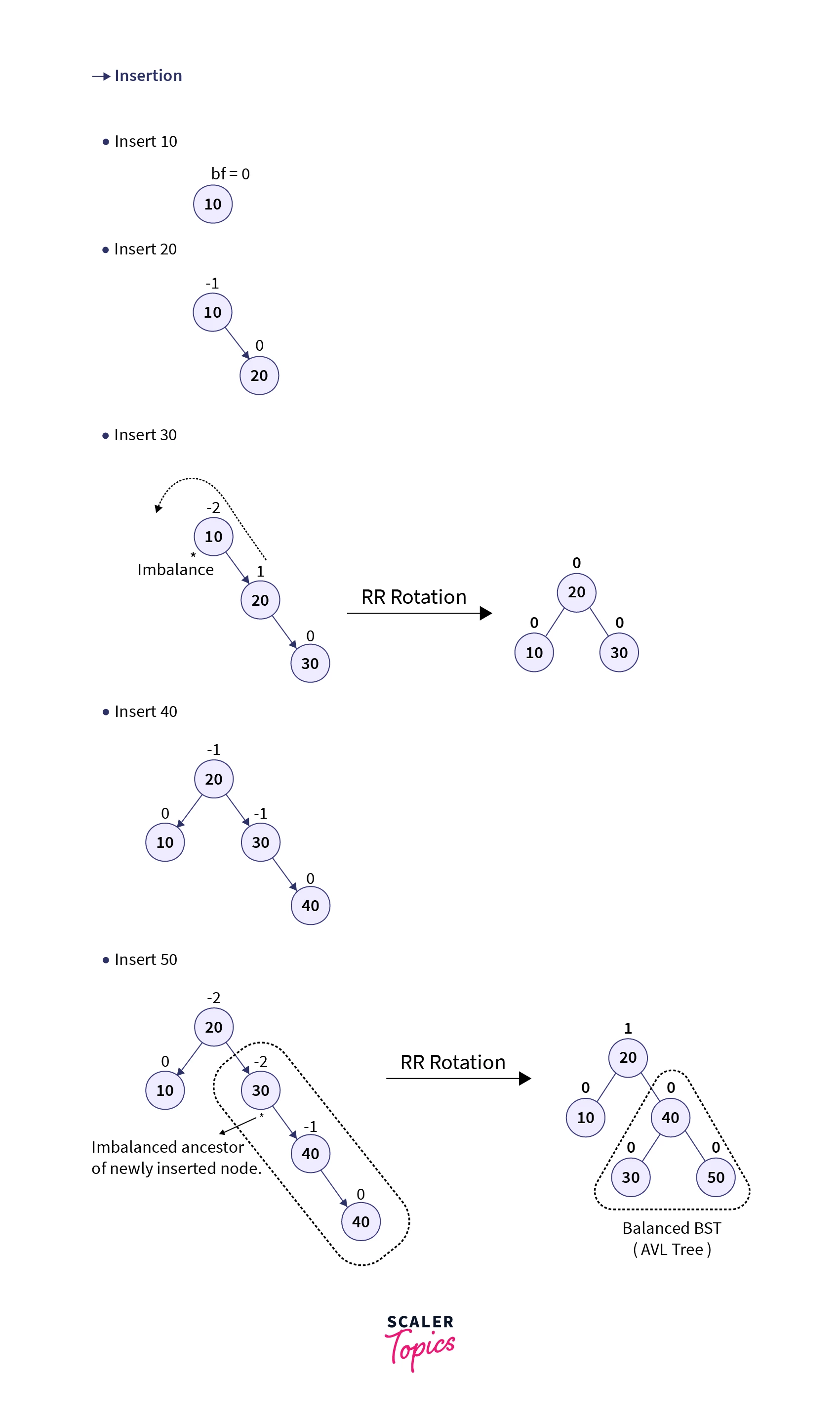

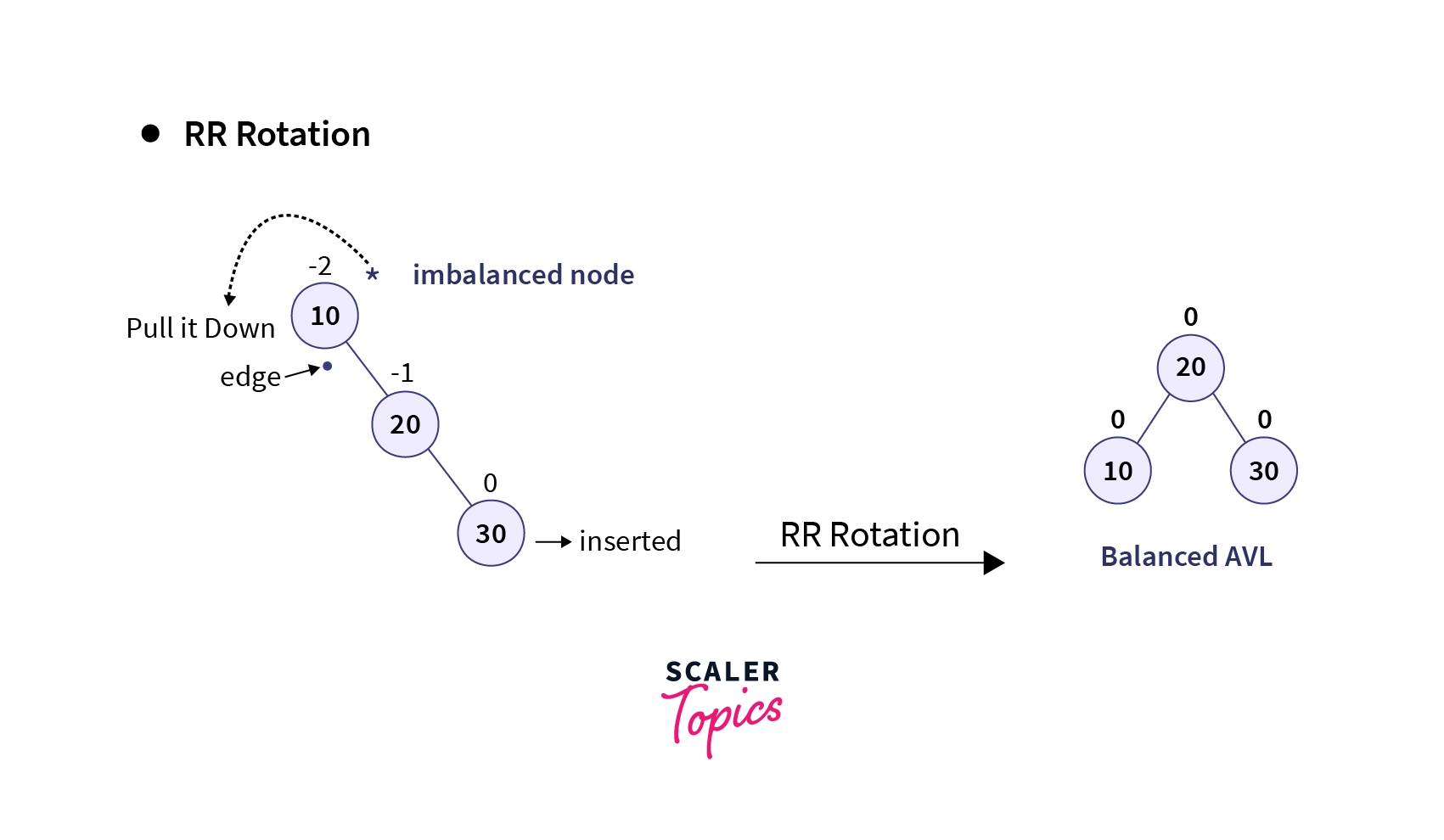

2. RR Rotation

It is similar to that of LL Rotation but in this case, the tree gets unbalanced, upon insertion of a node into the right subtree of the right child of the imbalance node i.e., upon Right-Right (RR) insertion instead of the LL insertion. In this case, the tree becomes right heavy and a left rotation (or anti-clockwise rotation) is performed along the edge of the imbalanced node to counter this right skewness caused by the insertion operation. Let’s understand this process with an example:

Consider a case where we wish to create a BST using the elements 10, 20, and 30. Now, since the elements are given in sorted order, the BST so created becomes right-skewed as shown below:

Upon calculating the balance factor of all the nodes, we can confirm that the root node of the tree is imbalanced (balance factor = 2) when the element 30 is inserted using RR-insertion.

Hence, the tree is heavier on the right side and we can balance it by transferring the imbalanced node on the left side by applying an anti-clockwise rotation around the edge (pivot point) of the imbalanced node or in this case, the root node.

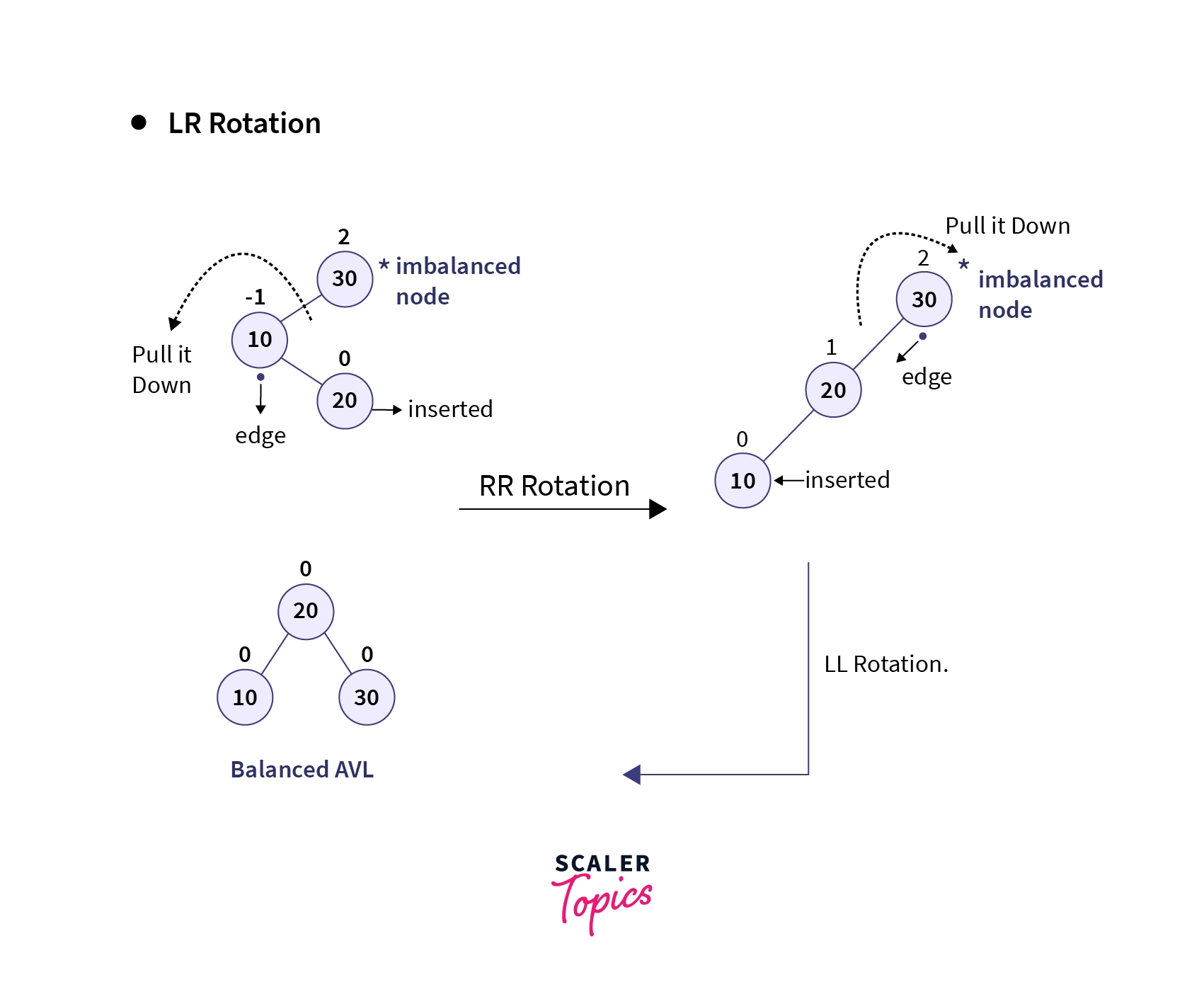

3. LR Rotation

In some cases, a single tree rotation is not sufficient to balance the tree after a height-affecting operation. One such case is the Left-Right (LR) insertion, where an imbalance occurs when a node is inserted into the right subtree of the left child of the imbalanced node. This situation can be illustrated with the example of creating a BST using elements 30, 10, and 20. Upon insertion of element 20 as the right child of the node with value 10, the tree becomes imbalanced with the root node having a balance factor of 2. Positive balance factors indicate left-heavy nodes, while negative balance factors indicate right-heavy nodes.

Now, if we notice the immediate parent of the inserted node, we notice that its balance factor is negative i.e., its right-heavy. Hence, you may say that we should perform a left rotation (RR rotation) on the immediate parent of the inserted node to counter this effect. Let’s perform this rotation and notice the change:

As you can observe, upon applying the RR rotation the BST becomes left-skewed and is still unbalanced. This is now the case of LL rotation and by rotating the tree along the edge of the imbalanced node in the clockwise direction, we can retrieve a balanced BST.

Hence, a simple rotation won’t fully balance the tree but it may flip the tree in such a manner that it gets converted into a single rotation scenario, after which we can balance the tree by performing one more tree rotation.

This process of applying two rotations sequentially one after another is known as double rotation and since in our example the insertion was Left-Right (LR) insertion, this combination of RR and LL rotation is known as LR rotation. Hence, to summarize:

The LR rotation consists of 2 steps:

- Apply RR Rotation (anti-clockwise rotation) on the left subtree of the imbalanced node as the left child of the imbalanced node is right-heavy. This process flips the tree and converts it into a left-skewed tree.

- Perform LL Rotation (clock-wise rotation) on the imbalanced node to balance the left-skewed tree.

Hence, LR rotation is essentially a combination of RR and LL Rotation.

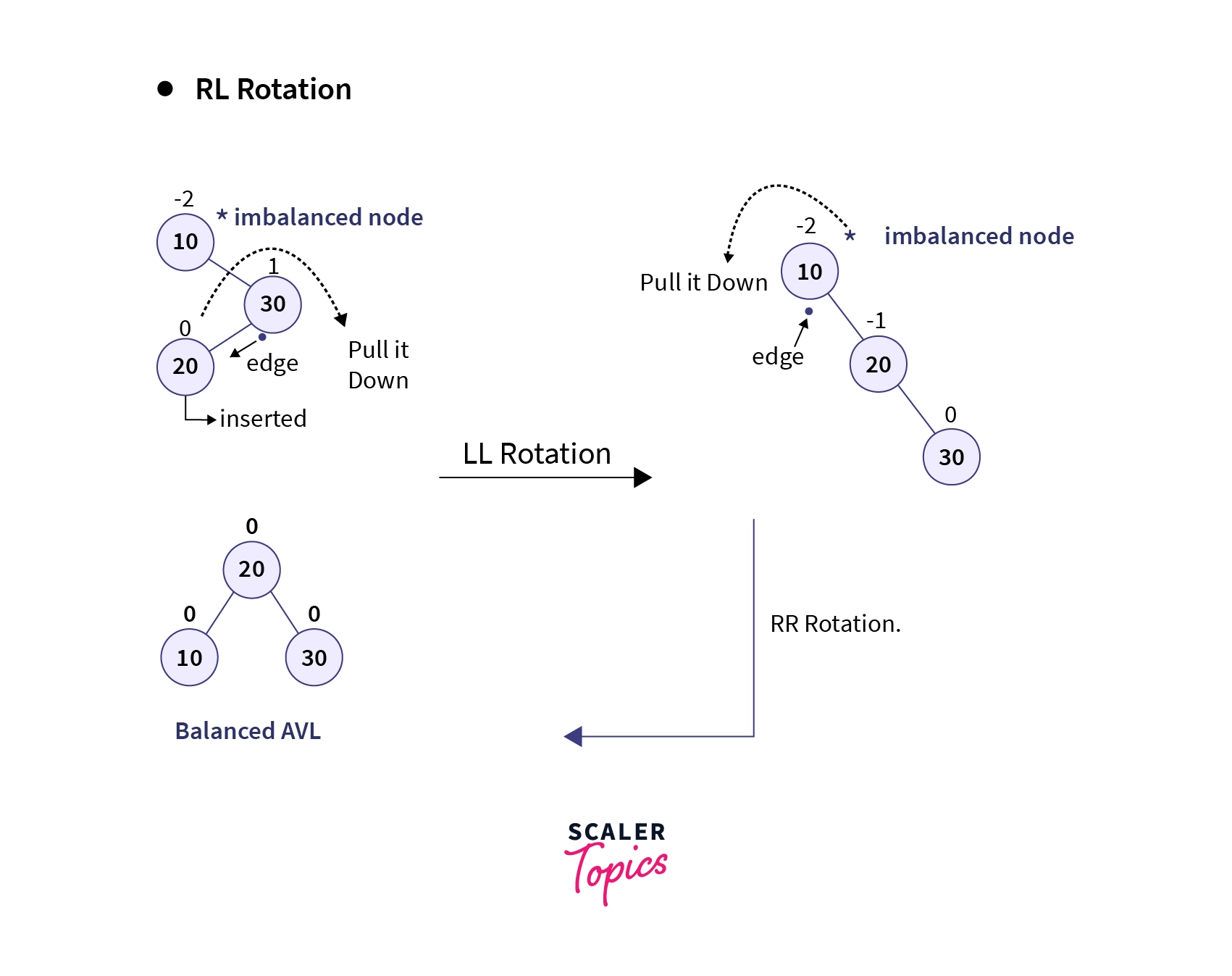

4. RL Rotation

It is similar to LR rotation but it is performed when the tree gets unbalanced, upon insertion of a node into the left subtree of the right child of the imbalance node i.e., upon Right-Left (RL) insertion instead of LR insertion. In this case, the immediate parent of the inserted node becomes left-heavy i.e., the LL rotation (right rotation or clockwise rotation) is performed that converts the tree into a right-skewed tree. After which, RR rotation (left rotation or anti-clockwise rotation) is applied around the edge of the imbalanced node to convert this right-skewed tree into a balanced BST.

Let’s now observe an example of the RL rotation:

In the above example, we can observe that the root node of the tree becomes imbalanced upon insertion of the node having the value 20. Since this is a type of RL insertion, we will perform LL rotation on the immediate parent of the inserted node thereby retrieving a right-skewed tree. Finally, we will perform RR Rotation around the edge of the imbalanced node (in this case the root node) to get the balanced AVL tree.

Hence, RL rotation consists of two steps:

- Apply LL Rotation (clockwise rotation) on the right subtree of the imbalanced node as the right child of the imbalanced node is left-heavy. This process flips the tree and converts it into a right-skewed tree.

- Perform RR Rotation (anti-clockwise rotation) on the imbalanced node to balance the right-skewed tree.

NOTE:

-

Rotations are done only on three nodes (including the imbalanced node) irrespective of the size of the Binary Search Tree. Hence, in the case of a large tree always focus on the two nodes around the imbalanced node and perform the tree rotations.

-

Upon insertion of a new node, if multiple nodes get imbalanced then traverse the ancestors of the inserted node in the tree and perform rotations on the first occurred imbalanced node. Continue this process until the whole tree is balanced. This process is knowns as retracing which is discussed later in the article.

Highlights:

- Rotations are performed to maintain the AVL Balance criteria.

- Rotation is a process of changing the structure without affecting the elements' order.

- Rotations are done on an unbalanced node based on the location of the newly inserted node.

- Single rotations include LL (clockwise) and RR (anti-clockwise) rotations.

- Double rotations include LR (RR + LL) and RL (LL + RR) rotations.

- Rotations are done only on 3 nodes, including the unbalanced node.

Operations on AVL Trees

Since AVL Trees are self-balancing Binary Search Trees, all the operations carried out using AVL Trees are similar to that of Binary Search Trees. Also, since searching an element and traversing the tree doesn't lead to any change in the structure of the tree, these operations can't violate the height balancing property of AVL Trees. Hence, searching and traversing operations are the same as that of Binary Search Trees.

However, upon the execution of each insertion or deletion operation, we perform a check on the balance factor of all the nodes and perform rotations to balance the AVL Tree if needed. Let's look at these operations in detail:

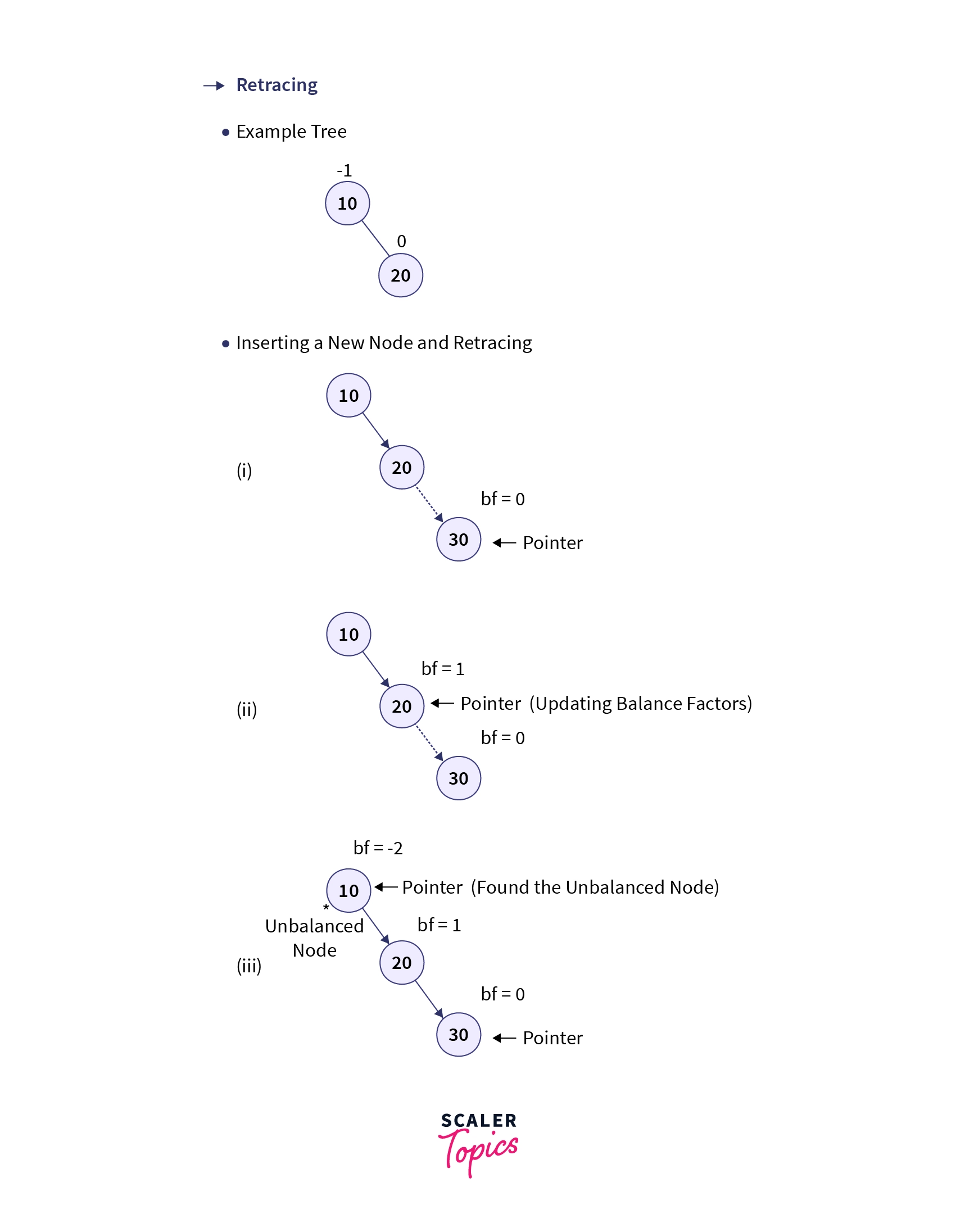

1. Insertion

In Binary Search Trees (BSTs), a new node (let's say N) is inserted as a leaf node by replacing the NULL value of a node's child. Similarly, in AVL Trees, the new node is also inserted as a leaf node, with its balance factor initially set to 0. However, after each insertion, the balance factors of the ancestors of the newly inserted node are checked to ensure tree balance. Only the ancestors are examined because the insertion only affects their heights, potentially inducing an imbalance. This process of traversing the ancestors to find the unbalanced node is called retracing.

If the tree becomes unbalanced after inserting a new node, retracing helps us in finding the location of a node in the tree at which we need to perform the tree rotations to balance the tree.

The below gif demonstrates the retracing process upon inserting a new element in the AVL Tree:

Let’s look at the algorithm of the insertion operation in AVL Trees:

Insertion in AVL Trees:

- START

- Insert the node using BST insertion logic.

- Calculate and check the balance factor of each node.

- If the balance factor follows the AVL criterion, go to step 6

- Else, perform tree rotations according to the insertion done. Once the tree is balanced go to step 6.

- END

For better understanding let’s consider an example where we wish to create an AVL Tree by inserting the elements: 10, 20, 30, 40, and 50. The below gif demonstrates how the given elements are inserted one by one in the AVL Tree:

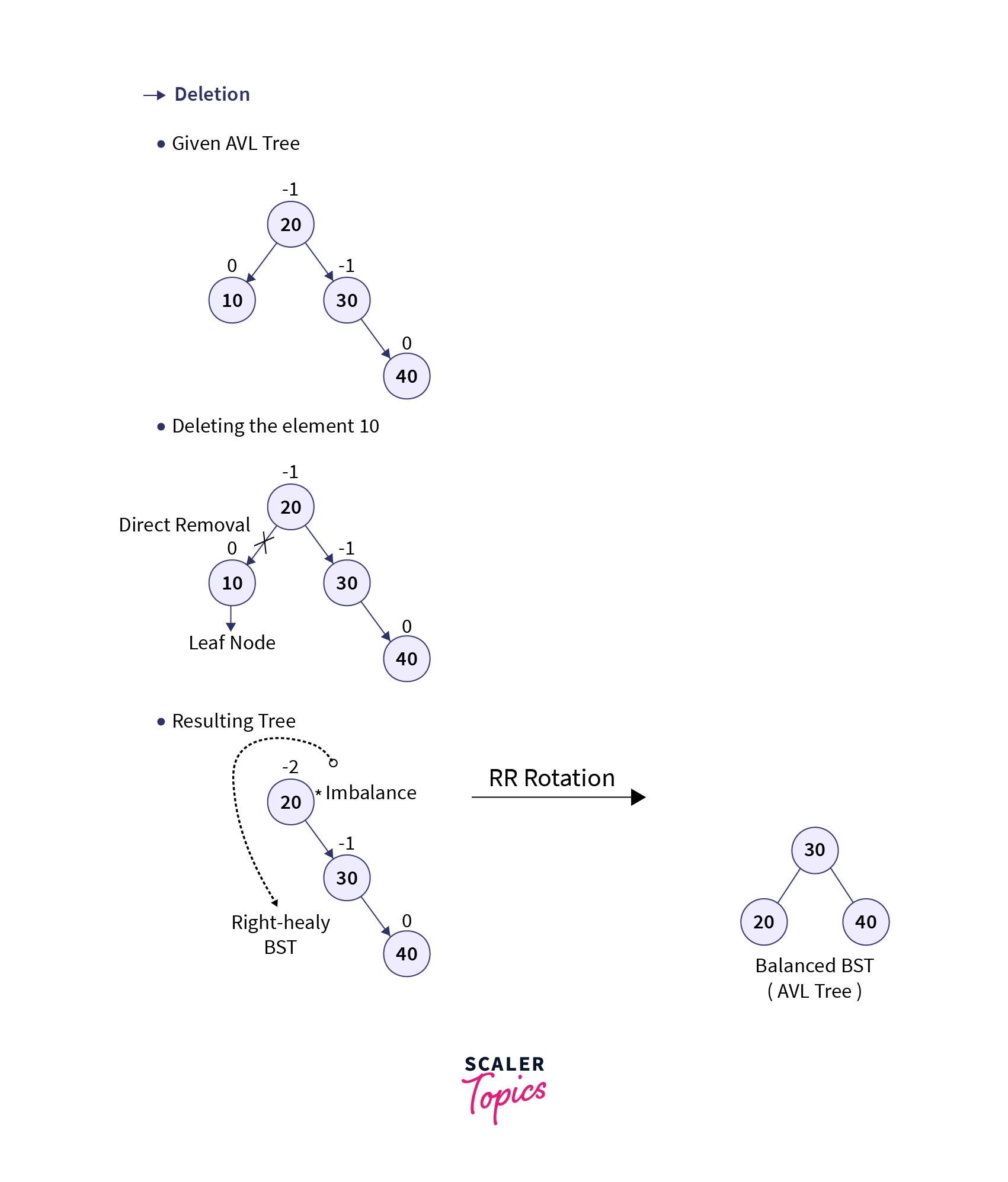

2. Deletion

When an element is to be deleted from a Binary Search Tree, the tree is searched using various comparisons via the BST rule till the currently traversed node has the same value as that of the specified element. If the element is found in the tree, there are three different cases in which the deletion operation occurs depending upon whether the node to be deleted has any children or not:

Case 1: When the node to be deleted is a leaf node

- In this case, the node to be deleted contains no subtrees i.e., it’s a leaf node. Hence, it can be directly removed from the tree.

Case 2: When the node to be deleted has one subtree

- In this case, the node to be deleted is replaced by its only child thereby removing the specified node from the BST.

Case 3: When the node to be deleted has both the subtrees.

- In this case, the node to be deleted can be replaced by one of the two available nodes:

- It can be replaced by the node having the largest value in the left subtree (Longest left node or Predecessor).

- Or, it can be replaced by the node having the smallest value in the right subtree (Smallest right node or Successor).

Just like the deletion operation in Binary Search Trees, the elements are deleted from AVL Trees depending upon whether the node has any children or not. However, upon every deletion in AVL Trees, the balance factor is checked to verify that the tree is balanced or not. If the tree becomes unbalanced after deletion, certain rotations are performed to balance the Tree.

Let’s look at the algorithm of the deletion operation in AVL Trees:

Deletion in AVL Trees

- START

- Find the node in the tree. If the element is not found, go to step 7.

- Delete the node using BST deletion logic.

- Calculate and check the balance factor of each node.

- If the balance factor follows the AVL criterion, go to step 7.

- Else, perform tree rotations to balance the unbalanced nodes. Once the tree is balanced go to step 7.

- END

For better understanding let’s consider an example where we wish to delete the element having value 10 from the AVL Tree created using the elements 10, 20, 30, and 40. The below gif demonstrates how we can delete an element from an AVL Tree:

Highlights:

- All AVL Tree operations are similar to that of BST except insertion and deletion.

- Upon every insertion and deletion, we need to check whether the tree is balanced or not.

- If required, the rotations are performed after each operation to balance the tree.

Implementation

To implement AVL Trees we will create two classes (templates of objects) i.e., the Node class and the AVL class where the node class represents the node of the tree while the AVL class describes the behavior of the AVL tree that contains various Node objects. The AVL Tree node can be represented as:

Here, the Node class consists of the integer data variable that represents the value stored in the node, the height variable that is used to determine the height of the node, the left_child and right_child variables that are used to store the left and the right children of a given node.

Now, let’s consider an example in which we will create an AVL Tree using values: 10, 20, and 30. Since these elements are in sorted order, they all will be inserted in the right subtree of the previous element. Hence, the tree will become right-skewed and an RR rotation will be required to balance the tree. The resulting AVL Tree has 20 at its root and 10 and 30 as its left and right child respectively as shown below:

Let’s understand how the above example is implemented using the OOPS concept:

- Firstly, we will create a class Node as described above to represent a node of the AVL Tree.

- Now, we will create another class AVL that represents the AVL Tree. This class will contain the following methods:

- get_height(Node object): This method will return the height of the Node object.

- get_balance_factor(Node object): This method is used to calculate the balance factor of the specified Node object.

- pre_order(Node root): This method is used to print the elements of the AVL tree using the pre-order traversal technique.

- LL_rotation(Node object): This method is used to perform LL rotation on the specified imbalanced node.

- RR_rotation(Node object): This method is used to perform RR rotation on the specified imbalanced node.

- insert(Node object, int value): It is a recursive function that is used to insert a node in the AVL tree.

Now, let's look at the implementation of the above discussed example in C++ and Java:

AVL Tree in Java

AVL Tree in C++

The output of all the above given implementations is same and is shown below:

Output:

Complexity of AVL Tree

The complexity of a data structure is measured based on the time taken by certain operations performed using that data structure and the space required to efficiently execute those operations. Therefore, the complexity includes:

Time Complexity

Let’s take a look at the time complexity of different operations performed using the AVL Trees:

Traversal Traversing is the process of accessing all the nodes present in the tree i.e., we need to visit all the nodes of the tree. Hence, for the n number of nodes present in the tree, the time complexity will always be O(n).

Searching Searching is the process of finding an element in the tree. As discussed earlier, the searching process doesn’t affect the height of the tree, Hence searching in AVL Tree is exactly the same as that in BST i.e., we always start searching from the root node and perform comparisons to find out the location of the element in the tree. But, since in AVL Trees the height is always balanced, we are reducing the comparison operations by half at each level as we traverse down the tree. Hence, the time complexity of searching operation using the AVL Trees is O(log n), where n is the number of nodes present in the tree.

Insertion Insertion is the process of adding a new node in the tree. Since we perform three sub-operations one by one in insertion, we need to consider the time taken by these sub-operations to evaluate the overall time complexity. These sub-operations include:

- Finding the location of the insertion by performing BST logic-based comparisons at each level of the tree.

- Inserting the node and calculating the balance factor of retraced nodes.

- Performing tree rotations if required.

Now, out of the above-mentioned sub-operations, The rotation process takes constant time as only 3 nodes are affected by the rotation.

Also, the balance factor calculation and the retracing process take constant time. Hence, the time complexity of insertion depends only upon the process of finding the location of insertion which depends on the height of the tree (discussed earlier in the article). Therefore, the time complexity for insertion in AVL Tree is O (log n) as the AVL Tree is height-balanced and attains minimal height i.e., O (log n).

Deletion Deletion is the process of removing a node from the tree. Similar to insertion, in the deletion process we also need to search the tree to locate the node to be deleted, then calculate the balance factor for retraced nodes, and perform rotations if required.

Hence, deletion operation also depends on the searching time i.e., the time complexity for deletion from an AVL Tree is also O (log n), where n is the total number of nodes present in the tree.

Space Complexity

The AVL Trees can be considered as a Binary Search Tree having height-balancing property. This height-balancing property is achieved by changing the structure of the BST using tree rotations. Hence, in AVL Trees we are only changing the structure of the data and not the data itself.

Hence, its space complexity is the same as that of Binary Search Trees i.e., O (n) as the BSTs can be visualized as a sorted linear array data structure having n number of elements.

Highlights:

- AVL Trees are always balanced i.e., their height remains O(log n).

- AVL Trees can balance themselves by only changing the structure of the tree.

- Because of AVL Trees' balanced height, the time complexity of certain operations are:

Operation Average-Case Worst-Case Traversal O (n) O(n) Searching O (log n) O(log n) Insertion O (log n) O(log n) Deletion O (log n) O(log n) - Space complexity is same as that of BSTs i.e., O (n) as AVL Trees doesn't modify the data itself.

Advantages of AVL Tree

AVL Trees were developed to overcome the dependency of operations on the height of the Binary Search Tree and the inability to control the tree's height. Hence, the main advantage of AVL Trees include:

- It can be used to perform searching operations on dynamic data sets, just like Binary Search Trees.

- Its height is always balanced i.e., it always stays as a balanced BST which has minimal or logarithmic or O(log n) height, where n is the total number of nodes present in the tree.

- It has a self-balancing property which is implemented by calculating the balance factor of all the nodes after each operation and performing certain Tree rotations to make sure that all the nodes follow the AVL Balancing criteria.

- Because of the self-balancing property, the AVL Tree remains height-balanced and it performs way better than BSTs by performing operations in logarithmic time complexity, especially when the number of nodes present in the tree is very large.

- Because of the logarithmic time complexity, the searches performed using AVL trees are faster and more reliable than that of BSTs.

Highlights:

- Can be used to search dynamic datasets

- Maintains its height by performing rotations whenever the balance factor of a node violates the Balancing Criteria. As a result, it has self-balancing properties.

- Exists as a balanced BST at all times, providing logarithmic time complexity for operations such as searching.

- Provides faster and reliable searching than BSTs, especially when the number of nodes present is very large.

Conclusion

- AVL Tree is a heigh-balanced BST that maintains its height by performing tree rotations according to a Balancing Criteria.

- Balancing Criteria: For all nodes, the subtrees’ height difference (balance factor) should be at most 1

- AVL Tree height is always O(log n) i.e., it has logarithmic time complexity for all the operations.

- Tree Rotations are changes in the structure of the tree, done only on 3 nodes without affecting the elements' order.

- All AVL Tree operations are similar to BST except insertion and deletion.

- When N is large, AVL provides faster and reliable search operation than BST.