Master's Algorithm

Learn via video course

Abstract

Master's Theorem is the best method to quickly find the algorithm's time complexity from its recurrence relation. This theorem can be applied to decreasing as well as dividing functions, each of which we'll be looking into detail ahead.

Scope

- This article starts with the need for the Master's Theorem and the definition for the same.

- Master's Theorem can be applied to 'dividing' and 'decreasing' recurrence relations. Both of which are discussed in detail.

- Further, it covers important cases that cover the computation involved in the Master's Algorithm.

- To understand any logical theorem, we need to understand how we reached that result. Hence, the idea behind the Master's Theorem for dividing functions is discussed in detail. Each term in the recurrence form and each comparison are explained in detail.

- Master's Theorem is made easy for the reader by explaining the proof and solving Master's Theorem examples for both dividing and decreasing functions.

- Every Theorem or method comes with its underlying limitations, which are important to understand to get the loopholes so that they can be avoided.

- In our Data Structures and Algorithms course, we thoroughly explore the Master's Theorem, providing a detailed understanding of its underlying idea, proof, and practical application. We also highlight the limitations of various theorems and methods, enabling learners to identify and address potential loopholes effectively.

Introduction

The most widely used method to analyze or compare various algorithms is by computing their time complexities. As the algorithm gets complex, the time complexity function calculation also complexifies.

Recursive functions call themselves in their body. It might get complex if we start calculating its time complexity function by other commonly used simpler methods. Master's method is the most useful and easy method to compute the time complexity function of recurrence relations.

We can apply Master's Theorem for:

- Dividing functions

- Decreasing Functions

Master's Method for Dividing Functions

Highlights:

- Used to directly calculate the time complexity function of 'dividing' recurrence relations of the form:

- =

- where =

- Compare and to decide the final time complexity function.

Master's Theorem is the most useful and easy method to compute the time complexity function of recurrence relations.

Master's Algorithm for dividing functions can only be applied on the recurrence relations of the form:

= ,

where

for example:

- = +

- = +

where,

n = input size (or the size of the problem)

a = count of subproblems in the dividing recursive function

n/b = size of each subproblem (Assuming size of each subproblem is same)

where the constants a, b, and k are constants and follow the following conditions:

- a>=1

- b>1

- k>=0

Master's Theorem states that:

- Case 1) If then:

- = θ

- Case 2) If , then:

- a) If p>-1, then =

- b) If p=-1, then =

- c) p<-1, then =

- Case 3) If , then:

- If p>=0, then =

- If p<0, then =

The same can also be written as:

- Case 1- If , then:

- = θ

- Case 2- If , then:

- a) If p>-1, then = θ

- b) If p=-1, then

- c) If p<-1, then

- Case 3- If , then:

- a) If p>=0, then

- b) If p<0, then

Hence, using the values of a, b, k and p, we can easily find the time complexity function of any dividing recurrence relation of the above-stated form. We'll solve some examples in the upcoming section.

Master's Theorem for Decreasing Functions

Highlights:

- Used to directly calculate the time complexity function of 'decreasing' recurrence relations of the form:

- = +

- =

- The value of 'a' will decide the time complexity function for the 'decreasing' recurrence relation.

For decreasing functions of the form ,

where =

for example:

where:

n = input size (or the size of the problem)

a = count of subproblems in the decreasing recursive function

n-b = size of each subproblem (Assuming size of each subproblem is same)

Here a, b, and k are constants that satisfy the following conditions:

- a>0, b>0

- k>=0

Case 1) If a<1, then

Case 2) If a=1, then

Case 3) If a>1, then

Hence, given a decreasing function of the above-mentioned form, we can easily compare it to the given form to get the values of a, b, and k. With that then, we can find the time complexity function by looking at the three possible cases mentioned above. We'll solve some examples in the upcoming section to understand better.

Idea Behind Master's Theorem for Dividing Functions

Highlights:

- To calculate and compare it with to decide the final time complexity of the recurrence relation

The idea behind Master's algorithm is based on the computation of and comparing it with . The time complexity function comes out to be the one overriding the other.

For example:

- In Case I, dominates . So the time complexity function comes out to be

- In Case II, is dominated by . Hence, the function will take more time, and the time complexity function, in this case, is , i.e., .

- In case III, when , the time complexity function is going to be

Now, Why do We Compare with and not Some Other Computation?

Given = + , we need to calculate the time complexity of . If we calculate the time taken by both the terms individually and compare as to which one dominates, we can easily define the time complexity function of to be equal to the one that dominates.

To achieve so, let's first understand the first term, i.e., , and then, we will calculate the time taken by this term.

Understanding the term - means - For our problem of size n, we divide the whole problem into 'a' subproblems of size 'n/b' each. Suppose ^'^ is the time taken by the first term.

Hence,

=>

can again be divided into sub problems of size each.

Hence,

=>

Similarly,

=> and so on.

to

=>

Let's assume, (When we divide a problem to 1/2 each time, we can say that . Here we divide the problem with b each time, hence, )

=>

=>

At ith level, the size of the sub-problem will reduce to 1, i.e. at ith level,

=>

=>

=> ,

Therefore, i = k at the last level, where the size of the sub-problem reduces to 1.

Using this deduction, we can re-write as:

=>

=>

=>

Hence,

was assumed to be the time complexity function for the first term. Hence proved that the first term takes time complexity. That is why we compare (i.e. the first term) with f(n) (i.e. the second term) to decide which one dominates, which decides the final time complexity function for the recurrence relation.

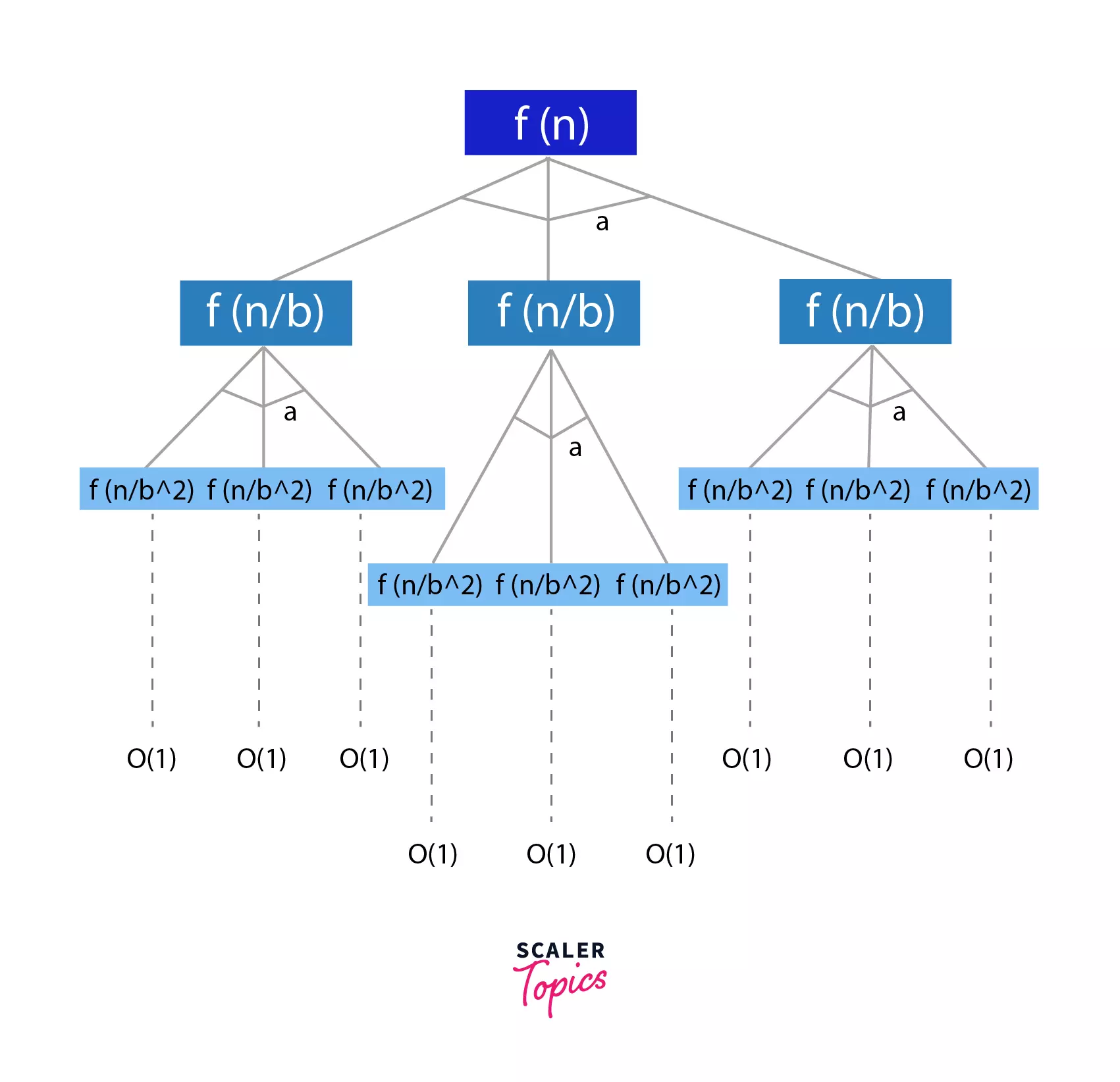

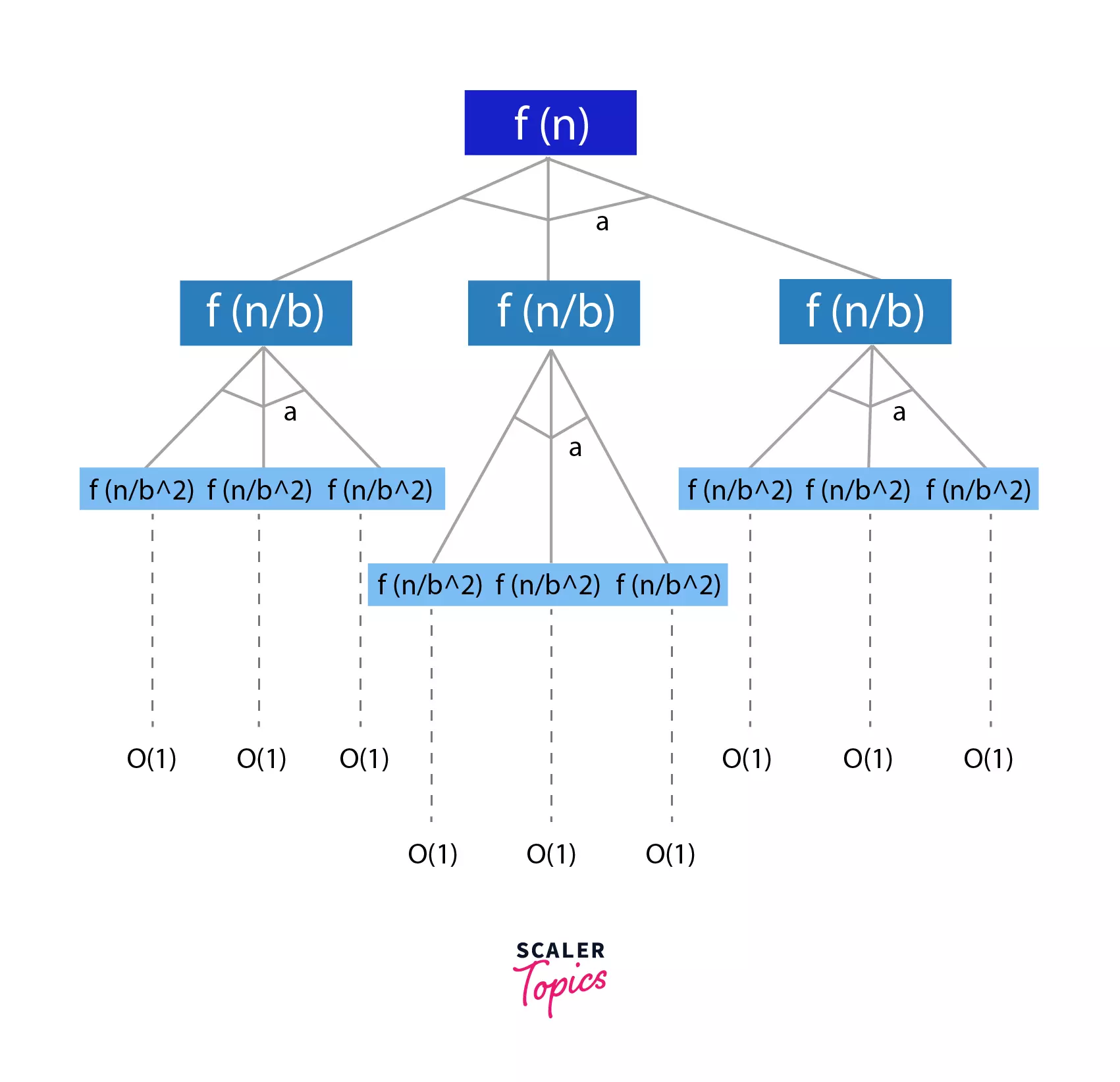

Proof of Master's Algorithm

This form of recurrence relation is in the form of a tree, as shown below, where every iteration divides the problem into 'a' sub-problems of size (n/b).

At the last level of the tree, we will be left with problems of size 1. This is the level where we have the shortest possible sub-problems.

The level =

Size of sub-problem = 1

Let us now calculate the work done at each level and that will help us compute the total work done, i.e. the sum of work done at logbn levels.

- Work done at level 1 =>

n^d^ comes from the recurrence relation - Work done at level 2 => => At second level, the problems are divided into size n/d, and there are 'a' sub-problems.

- Work done at level k =>

- Hence, Total Work Done => .

Note: This Summation forms a Geometric Series.

Now, let us look at every single case and prove it.

Proof of Case I: When

- In this case the work done is increasing as we move down the tree, i.e. towards the lower levels of the tree, with more sub-problems.

- Also, the total work done is an expression of Geometric Series, and in this case, r<1. Hence, the last term of the geometric series will have the maximum effect.

- Hence, the total work done will only have the last term, since, all the other terms of the geometric series can be ignored as compared to the last one.

- Hence, we can substitute k = log

bn in the time complexity expression.

=>

=>

=>

=>

Proof of Case II: When

- This means that the work done by each term in the geometric series is equal.

- Hence, the total work done is work done at each level * Number of levels.

=>

=>

=>

Proof of Case III: When

- This is exactly the opposite of the first case discussed.

- This is the case of decreasing Geometric Series. Hence, the first term is the maximum term and the major term to be considered.

- Hence, the total work done = first term, i.e. O(n^d^)

Hence, Proved!

Examples of Master's Theorem for Dividing Function

We'll now solve a few examples to understand Master's Theorem better. Before solving these examples, remember:

Master's Theorem can solve 'dividing' recurrence relations of the form , where

where the constants a, b, and k follow the following conditions:

- a>=1

- b>1

- k>=0

Example 1:

After comparison, a = 1, b = 2, k = 2, p = 0,

- Step 1: Calculate and k

- k = 2,

- Step 2: Compare with k

- (Case III)

- Step 3: Calculate time complexity

- If , then

Final Time complexity: θ(n^2^)

Example KaTeX parse error: Expected 'EOF', got '√' at position 14: 2: T(n) = 2T(√̲n) + logn

This recurrence relation function is not of the form , but can be converted to this form and then, solved with Master's Theorem. Let us convert the function form:

Let

On replacing with everywhere, we get:

Suppose

=>

Finally ,we have this equation of the form T(n) = aT(n/b) + f(n), where a = 2, b = 2, k = 1, p = 0, and f(m) = m

- Step 1: Calculate and k

- k = 1,

- Step 2: Compare with k

- (Case II)

- Step 3: Calculate time complexity*

- If p>-1, then

- Step 4: Replacing m with

Final Time complexity:

Examples of Master's Theorem for Decreasing Function

We can solve 'decreasing' functions of the following form using 'Master's Theorem for Decreasing Functions'. Form: T(n) = aT(n-b) + f(n), where f(n) = θ(n^k)

Here a, b, and k are constants that satisfy the following conditions:

- a>0, b>0

- k>=0

Example 1:

After comparison,

- Step 1: Compare 'a' and 1

- Since a>1,

- Step 2: Calculate time complexity

- Putting values of a = 2, b = 2, f(n) = n.

Final Time complexity:

Example 2:

After comparison, a = 1/2, b = 1, k = 2,

- Step 1: Compare 'a' and 1

- Since ,

- = θ(a^k)

- Step 2: Calculate time complexity

- Putting values of a = 2,and k = 2.

Final Time complexity: θ(n^2^)

Limitations of Master's Method

Highlights:

Relation function cannot be solved using Master's Theorem if:

- T(n) is a monotone function

- a is not a constant

- f(n) is not a polynomial

Master's Theorem can only be used for recurrence relations of the form:

- = + , where (Dividing Recurrence Relation) Or,

- = + , where (Decreasing Recurrence Relation)

This marks the limitations of Master's Theorem, which cannot be used if:

- T(n) is monotone function, For example: =

- a is not a constant. Consider this: = . This function cannot be solved to get the time complexity functions because that changes our comparison function(as discussed above). We compare with for the final dominating time function.

If a is not constant, this changes our Master Theorem's conditions altogether.

- If is not a polynomial. Again for similar reasons, the Master's Theorem can only be applied to the above-stated form under the given constraints.

Conclusion

-

For the recurrence relations of the type

- , where (Dividing Recurrence Relation) Or,

- , where (Decreasing Recurrence Relation)

where,

n = input size

a = count of subproblems in the recursion function

n/b = size of each subproblem (Assuming size of each subproblem is same)

can be solved using Master's Theorem, and we can directly calculate the time complexity of such relations.

-

Necessary constraints applied to this form are:

- a>=1

- b>1

- k>=0

and

- a>0,

- b>0

- k>=0

respectively.

-

The final time complexity of this form is found out by comparing the time complexity of the first term, i.e., aT(n/b), and the second term, i.e., f(n) in 'dividing functions' and 'a' with 1 in the case of 'decreasing' functions.

-

We cannot apply this Theorem to relation function where

- a is a constant,

- T(n) is a monotone function

- f(n) is not a polynomial