What is Data Structure?

Learn via video course

Overview

A data structure is a meaningful way of arranging and storing data in a computer so as to use it efficiently.

Takeaways

What is Data Structure? Data structures provides means for management of large dataset such as databases or internet indexing services.

Introduction to What is Data Structure

a real-life analogy...

If you go out shopping at a grocery store, you would expect related items to be in the same section, such as the fruit and vegetable section, the meat section, the dairy section, etc.

These items can be further organized according to their prices and so on.

Such meaningful structuring of items helps the business operate efficiently. Because you (or anyone) would never visit (or re-visit) a messy store where it takes forever to find each of your required items. 😔

Likewise, you wouldn't want to use a slow computer where searching for an old photo takes around a day. That's why we have an efficient data structure called the ‘file system’ that organizes and stores said data into a hierarchy starting from the root directory.

Though file system is a much more advanced data structure, it does try to solve the fundamental issue that any data structure tries to tackle i.e. efficient way of storing, organizing, and maintaining data.

Now, before proceeding further, we need to make sure we are on the same page when talking about certain terms.

Basic Terminology

-

Data - represents an atomic value or collection of facts that could lead to contextual information e.g. a unit value 40 or a collection such as [(35, 19), (35, 18), (30, 18), (29, 18), (29, 17)] doesn't make sense in isolation but are referred to as data nonetheless. It's only when we associate respective contexts like the price of apples per kg. or 5-day temperature forecast, do we harness information out of the raw data.

-

Database - an organized record of data so as to use it efficiently, nevertheless usually stored in hard disk or permanent memory as opposed to data structures being stored usually in RAM or volatile memory.

-

Algorithm - is a step-by-step set of instructions for doing stuff such as making an omelet, playing rugby, checking primes, and reading this article. From washing machines to self-driving cars to every deterministic action ever taken can be expressed as algorithms.

-

Asymptotic Complexity - determines how fast an algorithm can compute (with respect to input) when applied over a data structure. Check here to learn more about it since an in-depth discussion is much needed for the uninitiated but it's out of the scope for this article.

It's important to note that we will mainly discuss non-primitive data structures; what I mean by that you shall see! 😀

Types of Data Structures

You probably heard about data structures in association with an array or a linked list.

But what makes an array an array, and how is it any different from a linked list or a tree?

How many different data structures are out there in the wild? 🤨

Well, I am here to tell you that there are mainly two types of data structures, namely

The primitive data structure, also known as built-in data types can store the data of only one type. You know the integers, floating points, characters, pointers, stuff like that.

Non-primitive data structures, on the other hand, can store data of more than one type. For example, array, linked list, stack, queue, tree, graph, and so on. These are often referred to as derived data types.

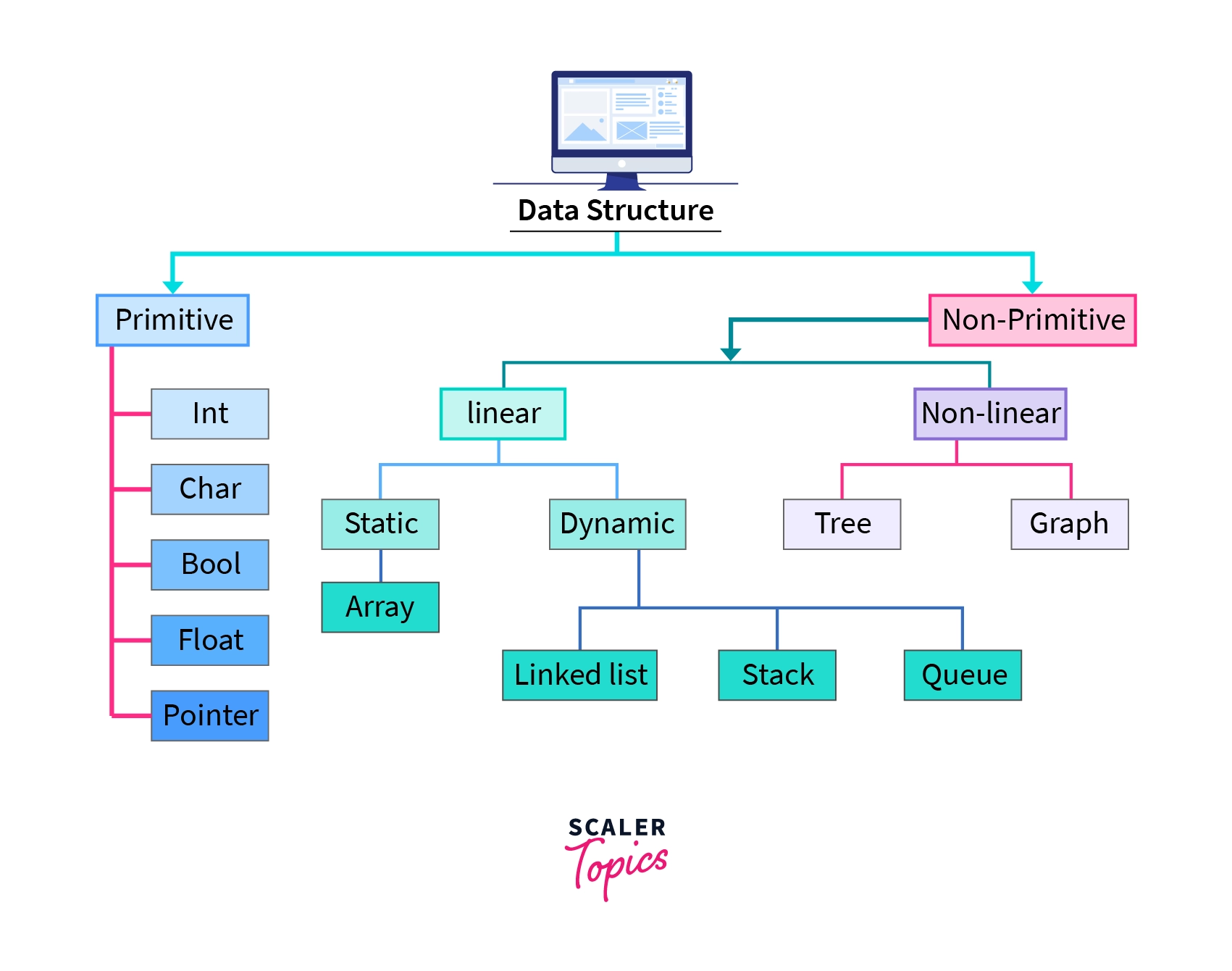

Let's have a look at the big picture

As you can see, the non-primitive data structures are further classified into linear and non-linear.

Linear data structures are again categorized based on whether they are static or dynamic.**

And if that's not enough, stacks and queues are also interchangeably referred to as ADT and not concrete data structures!

Wait a minute, what! 😯

Let's take a step back, and first, discuss linear vs non-linear.

Linear data structures

As the name suggests, its data have to be structured in a linear order. That means there is no hierarchy, and elements are held together sequentially either by pointers or contiguous memory locations. In layman’s terms, each element appears to be connected with one another in a linear fashion.

Let's take a scenario, say we want to store the prices of all the groceries that we purchased. So we can allocate a contiguous memory block of size 'n', where 'n' refers to the number of items we purchased.

Array

Here, an array could be a suitable data structure as it's just a collection of similar data types like int, float, char, etc.

Since the prices are likely to be floats, we could store these in an array, like so:

The coolest thing about an array is it provides you constant access to each element by means of zero-based indexing. 🙅🏻

In order to access the price of the third element, we could say prices[2].

Now, how about storing the prices of all the groceries that we are about to buy but don't know the quantity in advance!

Since the array requires you to specify the size in advance, here such an attempt would be futile.

In the previous case, notice we had to declare the size by specifying n = 4. I mean you could argue for creating a large enough array so that we can never run out of spaces.

For example, setting the size of an array to the total number of items in the store or something. Nevertheless, intuition suggests that would be highly inefficient. Also, remember, the sole purpose of data structure is to increase efficiency by means of carefully organizing data.

Linked List

Instead of allocating the contiguous memory blocks in advance, how about we wrap our data in a node that spits out a reference that we can use to point to the next node.

Voila, we have a list of linked nodes vis-a-vis linked list. Alas, we lose indexing!

And the more we talk about data structures, the more you will see that there's no silver bullet, instead, everything's a tradeoff.

To learn more about linked lists, you can check out this article. 😉

Linear data structures are mainly classified into two categories, static and dynamic.

- Static data structures - Here the size of the data structure is allocated in the memory during the compile-time thereby rendering the allocated size fixed. This results in the maximum size of such data structures needing to be known in advance, as memory cannot be reallocated at a later point. That’s why they are called static.

- Dynamic data structure - Here the size is allocated at the runtime which makes it flexible. As the memory can always be reallocated depending on the requirements, these data structures are called dynamic.

I think, suffice to say, an array is a static data structure whereas a linked list is dynamic!

Non-linear Data Structures

Non-linear data structures are not arranged sequentially in that each element of such data structures can have multiple paths to connect to other elements or form a hierarchy.

Let's go back to the example of the file system. Visualizing it gives an impression of a upside down tree-like structure that preserves hierarchy in the form of the parent folder, child folder, and so on.

So, how can we build such a file system using data structures?

Obviously, by using a tree-like data structure aka trees (pun intended). 🌲

Tree

Trees are indeed one of the most important data structures with a hierarchical relationship among its elements aka nodes which are connected by links aka edges. If it helps, think of a tree as a linked list but with two or more pointers.

However, unlike a circular linked list, a tree doesn’t have any cycle, ♻

Because it doesn't make any sense if we could circularly navigate our directory like so,

For instance, searching for a pic that didn't exist in the computer, to begin with, would never terminate and crash the whole system.

For instance, searching for a pic that didn't exist in the computer, to begin with, would never terminate and crash the whole system.

But there could be instances where we do need such cycles or the situation could be a tad bit complicated.

In the context of social media, an expression like this doesn't seem unheard of;

'I'm Jane, a friend of a friend of Joe, and since you also follow Joe, I was thinking maybe we can be friends, please accept my request...'

What data structure can model these intricate relationships? 🔍

Graph

A graph contains nodes and edges much like a tree but far more superior and versatile. It can be so powerful that it has a dedicated branch named 'Graph Theory’ in mathematics. 😋

A graph can have cycles, disjoints, and all kinds of flexibility on top of a tree. Hence, it's important to remember that a tree falls under a special category of graphs.

Whew, that was a lot...

I hope you have got some clarity on the major distinctions between linear and non-linear data structures.

But it doesn't stop here, we can mix-match and further build more and more niche data structures by implementing the generic counterparts.

For example, a hash map is perhaps the most useful (disputable) data structure that implements an associative array abstract data type, a structure that can map keys to values. Hash maps, in turn, are often built on top of a linked list. 👁

A linked list can also directly implement other abstract data types such as stack and queue.

But what is this abstract data type? 🤨

Abstract Data Type (ADT)

In computer science, we often talk about abstraction and programming to an interface.

For instance, you don’t need to know automobile engineering to drive your car to the grocery store. You just have to know how to drive since the manufacturers already abstracted away all the intricacies of the car engine and other internal mechanisms.

And for driving, you do get interfaces such as a steering wheel and a gearshift in order to interact with the car.

Likewise, every data structure has a corresponding interface known as Abstract Data Type or ADT. Simply put, an abstract data type only addresses the interface, and data structures implement that interface.

Queue

For instance, a queue is an ADT that must maintain the First In First Out (FIFO) ordering of elements. It simply means, the first person to get in the queue, would be the first person to get out of the queue.

This idea of a queue ADT might have been inspired by a real-world standing queue in front of a grocery store counter or similar scenarios.

Stack

On the other hand, Stack is another example of ADT that conforms to the Last In First Out (LIFO) ordering of elements.

This idea of this ADT is probably inspired by a real-world stack of books. 📙

Basic Operations

We have covered a lot of ground by discussing several data structures such as array, linked list, stack, queue, tree, graph, hashmap and probably hinted at their respective use-cases but never really got to the specifics.

Like, how do they operate?

Well, it turns out almost all data structures support a few key operations... which we are about to explore next!

Search

Hands down, the most useful operation! If you cannot search an element, how are you ever gonna be able to do anything?

Okay if that sounds vague, think of it like this — you are at a grocery store (for some reason I'm obsessed with grocery) and you are putting items into a cart.

Here, an item like Rs. 50 chocolate bar would be your data and the cart itself is a data structure. Let's assume you forgot afterward if you had picked the chocolate bar or not.

How can you ever be sure if you are unable to search? 🔎

Now, you may claim your memory is sharp (unlike me) and you remember putting the item in the cart but then how can you show it to the counter?

How can you ever access it if you cannot search for it? (Thoughtful exercise, give it a shot! 💡)

Traverse

Traversing means iterating through a data structure in some particular order.

In an array, you could access an element in constant time i.e. O(1) provided you knew its position beforehand!

But that doesn't mean you can search for it in constant time.

You still need to start from the first index and search for it. Hence, in order to search you need to traverse. 🏃♂️

So, we conclude that traversal is also as useful as searching.

Insert

Insertion basically means to insert one or more elements into your data structure. Elements can be inserted at the beginning, end or at any specified position.

In some data structures, you don’t even need to specify a position e.g. insertion in a heap.

Insertion is a fundamental operation because if you cannot insert anything into your data structure, why bother having one?

A cart 🛒 exists for a reason to help facilitate storing items for purchase. In order to store it, you must insert it.

Delete

Once we are done with our shopping, we need to delete items from the cart, show them to the counter, and put it into our bag (another data structure).

Now, imagine we didn't have this operation so we would never be able take any items out of the cart ever.

Seems like a miserable world I don't wanna live in!

But you can argue, you don't need to delete a number in an array to use it!

Because we can access an element like this:

Well, you got me there!

So, depending on the chocolate_bar's data type, there are two possible scenarios:

- accessing it creates another copy of the chocolate bar and then you eat it but it's highly unlikely because it violates the law of conservation of energy.

- eating the chocolate bar from the cart itself and leaving just the wrapper behind.

The second scenario is practical but highly inefficient which contradicts the existence of data structure.

I mean you can do that but chances are you are much better off by using a cart that supports deletion and you can use other data structures like a plate to eat 🍽 and a garbage bin 🗑 to dispose of the wrapper.

Update

This one builds on top of searching and inserting e.g. you suddenly realized you've purchased the wrong chocolate bar so you search the wrong bar in the cart and replace it with the correct bar.

Now you might say, replacing could be also be considered an operation that builds on top of deletion and insertion.

But it's not!

I know it's weird, I mean talk about violating conservation laws left and right!

But here we reach the limit of our analogy of the physical world with that of the binary!

When I say replace, I mean magically making the older bar disappear and changing its place with the new one by doing:

Here we just searched the cart and then found it to be on position 35 and replaced it with a better_chololate bar. ✍🏻

Sort

This is a quite advanced data structure operation and an array is highly efficient for this kind of operation due to its constant access and linear ordering.

Linked lists can also be sorted by manipulating pointers and so can be trees.

A special sorted binary tree known as the Binary Search Tree is one of the most useful data structures out there.

I mean, you can imagine and probably appreciate a grocery store for having all its similar items sorted in an aisle according to their prices from left to right.

So, if you are craving an expensive chocolate bar you know exactly in which direction to look! ➡️

Merge

This is not a fundamental operation and in fact, it uses other basic ones to help facilitate the said process e.g. merging two linked lists into one by manipulating pointers, or two BSTs into one.

To put into perspective, putting the items from two carts into a larger one could be a case for merging. 🛒➕🛒= 🛒

Merging is also used for one of the most well-known sorting algorithms, namely merge sort (how original).

Advantages of Data Structures

None of my previous rants would make sense if there weren't inherent benefits for using a data structure.

But I must tell you that these advantages are generic and conformed by every single data structure, so let's check them out:

-

Efficiency - All these various data structures exist for a reason and that is to facilitate efficient storage and retrieval of data for various use-cases.

For example, searching for a value by its key is better suited for hash maps than an array.

A tree is more likely to be efficient to model any file system but not efficient for representing relations in social media applications.

-

Abstractions - Every data structure provides clean interfaces in the form of operations exclusive to their respective abstract data types, which makes their internal implementation hidden from developers.

You don't need to know how STL's unordered_map works under the hood to write an application using it in C++, you just need to know what operations it supports and how you can use that to your advantage?

-

Composition - Fundamental data structures can be combined to build more niche and complex data structures.

In a database management system, indexing is usually implemented using a B+ tree which is based on top of the B tree - a special kind of n-ary self-balancing tree data structure.

In fact, you can think of the database itself as this huge composite data structure capable of storing data even when a program has run its course.

Why do we need to learn Data Structures❓

That's a reasonable question because many things in life are important but not everyone needs to learn everything. 🤗

Having said that, if you aspire to become a software engineer or an ML engineer or a data scientist or pursue a Ph.D. in computer science, you need to know your data structures (and also algorithms to work with those data structures).

The necessity of knowing data structures could also be a self-fulling prophecy, albeit for wrong reasons, nevertheless every bit happening.

Let me parse it for contextual ease of understanding —

- Data structures are important, so companies ask them in the interview, and to pass it we must learn it. But another way of looking at this is — data structures are important, so we must learn them to improve our problem-solving skills and become better at our respective jobs or cracking technical interviews.

Whichever lens you pick is up to you but it doesn't take away from the fact that data structures are indeed one of the most important topics that have massive implications in software and computer science (and technical interviews).

In this article, we mostly talked about data structures but if you want to know why we need to learn algorithms as well -- perhaps you could check this article -

Why learn data structures and algorithms?

That article dives much deeper into answering the question.

Conclusion

Let's take a brief pause and reflect on what we have seen so far! 😇

TLDR;

- We learnt What is Data Structure: data structure is a meaningful arrangement of data for its efficient storage and use.

- there are two categories namely primitive and non-primitive.

- non-primitives are further classified into two types - linear and non-linear.

- linears are again divided into two kinds - static and dynamic.

- dynamics are again divided into - nah just kidding!

- array, linked list, stack, queue fall into the linear category.

- trees and graphs are non-linear.

- stacks and queues are interchangeably and rightfully called ADTs.

- data structures support 7 major operations namely, searching, traversing, inserting, deleting, updating, sorting, and merging.

- such operations lend to several advantages due to a strong focus on efficiency, abstraction, and composition.

- data structures are quite important for cracking technical interviews for big tech companies.

Whewie… 😅 we covered a lot in this article and if you can answer...

I encourage you to go ahead and pick one data structure and learn it well and also practice problems that can be solved using it.

You might wanna start with simpler ones and then slowly work towards more complicated data structures.

Oh and I also remembered that I have yet to purchase this week's grocery! 😫