ETL Testing

Learn via video courses

Overview

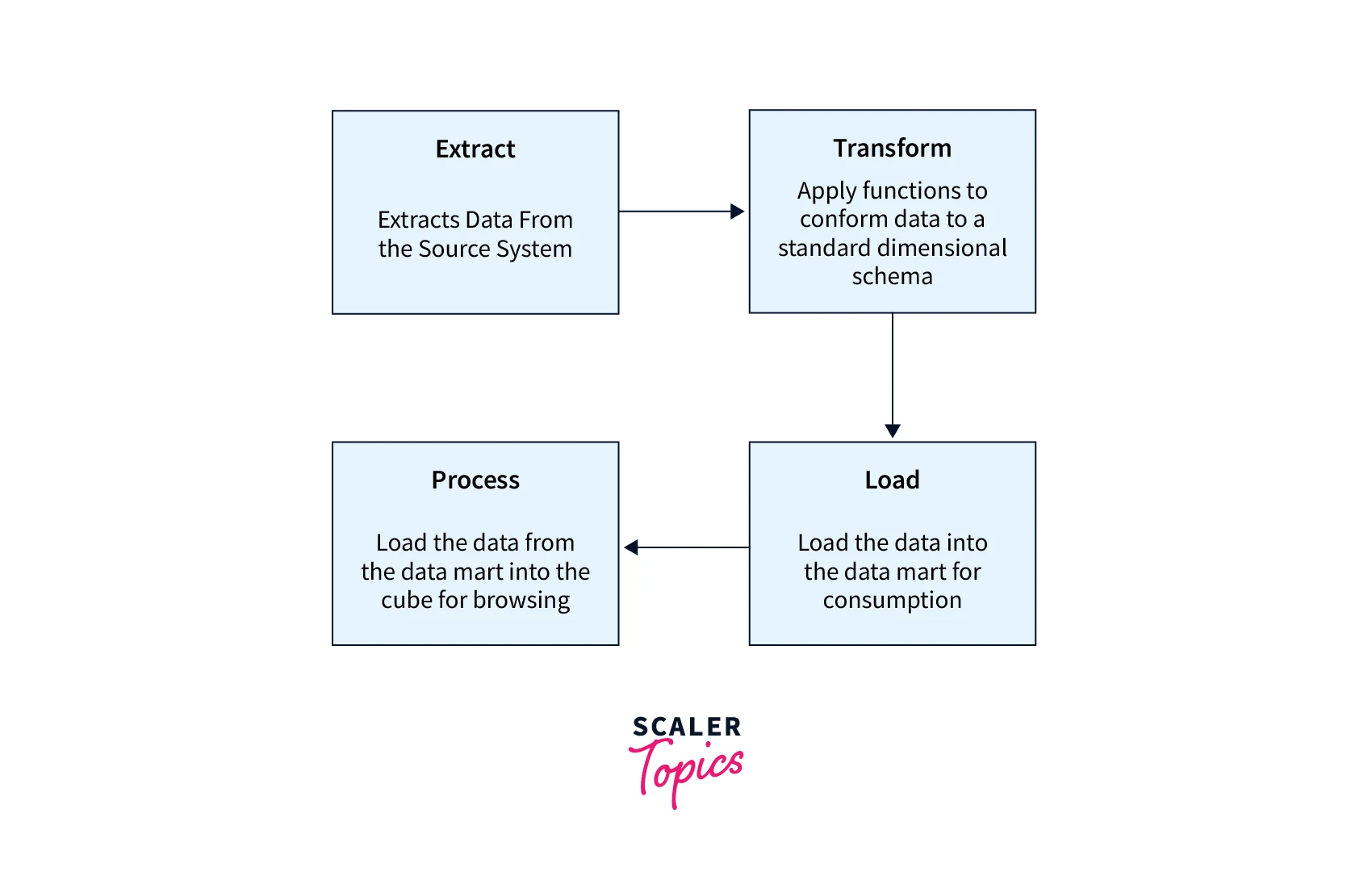

The most crucial element for any product is data. Just storing the data is not enough, one should be able to manipulate data and extract information from it as well. ETL stands for Extract-Transform-Load, where data is taken from the source, verified, cleaned, transferred, and loaded to other applications.

What is ETL Testing?

ETL Testing stands for Extract-Transform-Load Testing. Nowadays, in the industry, there is a famous architecture called MVC, which stands for the Model View Controller. Where the controller acts as middleware. Data is taken from the database, which is mapped to a model schema for the data. Now that data in the controller is further cleaned and processed. That processed data is sent to the front end via API.

For example, there is a tutorial site and the user registers on the site, then data is stored in the database as full name, DOB, education qualification, etc. Now Admin wants to see on its portal - how many users registered today. This data from API goes to the backend, where the date filter is passed. That filtered data which we have after the query is sent to UI where the admin can see the final count.

In ETL testing, this entire process, from storing the data to validating it, cleaning it, verifying, and ultimately manipulating the data to extract results, comes.

- Extract: Get the data from the table in the data source or from an excel sheet.

- Transform: Validate the data is stored correctly. Validate the schema, normalization is maintained, and keys are used. Clean the database if required. All these operations are done directly in the database. Hence, the backup must be maintained. Also, deleting or editing an entry directly in the database is prohibited, so that must be taken care of. Database management systems also create metadata that can also be used.

- Load: Load the data into the data warehouse, where all the raw data from all the sources is kept. An aggregate of all the data is kept in the warehouse.

Process for ETL Testing

ETL testing is a 5 stage testing process. Testing is done at each stage of ETL.

1. Maintain the data sources: Data generates at various stages in the product. The user might be uploading an excel sheet, data backups getting maintained, the actual database, database credentials, etc.

2. Data acquisition: Creating an API to transfer the data to the database, connecting both the endpoints of the application and database, sending GET/POST requests, and ensuring encryption and data security.

3. Dimensional Modelling: Implementing the business logic that is the architecture of the entire database management system and creating schema structure, Keys, backups, rollbacks, maintaining atomicity of transactions, and how data would be mapped to the controller via models. They ensure the data synchronization on the model and database of complicated data types like date and time.

4. Populate the data: Populate the data, and create an API to push the data to the database. If the data is in an excel sheet, automate the reading and transfer it to the database. Add exception handling and error logging to the system. Parallel execution is to be maintained as well.

5. Report generation: Data mining and result report is generated. These results are the outcome of the queries, and efficient queries are used as data is usually huge. Reports are generated when the data goes to the system, and their data is manipulated at the controller level.

Types of ETL Testing

1. Production Validation Testing: The database at the production server is tested here for accuracy. Usually, the testing is done at the UAT server, but ensuring the database connected to the live action is correct comes under production validation.

2. Source-to-Target Testing: During data transfer via an API, data is validated to be correct and comes under source-to-target testing.

3. Application Upgradation Testing: When the product upgrades, that is, new features are added, and some might be removed, the database is still fine. Also, upgradation impact database schema. So, previous data is protected, and new changes are validated here.

4. Metadata Testing: Metadata tests the data type, length, constraints, index, and keys, i.e., database schema.

5. Data Completeness Testing: Data completeness testing ensures the exact data is stored in the database. The transaction is maintained, and multiple tables reflect the data. In case of failure, rollback is done from all the affected tables.

6. Data Accuracy Testing: Correct data is stored with the data type, and data is transferred similarly.

7. Data Transformation Testing: Data insertion is different from the data. For example, in a database, usually, everything is saved in uppercase, dates are stored differently, data is inserted into different tables, and some mathematical and pattern operations are done. Validating these operations come under data transformation testing

8. Data Quality Testing: Data quality testing ensures null checks are maintained and references and unique IDs are maintained.

9. Incremental ETL testing: Incremental testing ensures updates and inserts are working correctly on the data.

10. GUI/Navigation Testing: This testing ensures the data on the front-end application is correct.

ETL Test Preparation

ETL testing validates the data getting stored in the source is accurate. The data transferred from the source to the target is in the correct format. The tester validates the schema, architecture, business logic, data itself, and data mapping at all stages.

Create ETL Test Case

| Test Scenario | Test Case Description | Test Steps | Expected Result |

|---|---|---|---|

| Mapping Document Validation | Validate the mapping document for ETL process | 1. Review the mapping document | Mapping document adheres to the defined specifications |

| 2. Verify the mapping rules and transformations | Mapping rules are accurate and align with the requirements | ||

| Validation | Validate the data extracted from the source system | 1. Extract data from the source system | Extracted data matches the expected format and structure |

| 2. Compare extracted data against validation rules | Data passes validation checks according to defined rules | ||

| Constraint Validation | Validate data against defined constraints | 1. Extract data from the source system | Extracted data adheres to defined constraints |

| 2. Apply constraint validation rules | Data meets all the defined constraints and rules | ||

| Data Consistency Issues | Identify and address data consistency issues | 1. Extract data from multiple sources | Consistent data across all sources is ensured |

| 2. Compare and verify data consistency | Data consistency issues are identified and resolved | ||

| Completeness Issues | Identify and handle incomplete data | 1. Extract data from the source system | Extracted data contains all the required fields |

| 2. Check for missing or incomplete data | Incomplete or missing data is identified and addressed | ||

| Correctness Issues | Identify and address data correctness issues | 1. Extract data from the source system | Extracted data is accurate and correct |

| 2. Verify data against business rules and logic | Data correctness issues are identified and resolved | ||

| Transformation | Validate data transformations during ETL process | 1. Retrieve extracted data for transformation | Data is transformed correctly based on defined rules |

| 2. Apply transformation rules and logic | Transformed data matches the expected output | ||

| Data Quality | Perform data quality checks on extracted and transformed data | 1. Access extracted and transformed data | Data quality checks pass without any critical issues |

| 2. Execute data quality checks | Identified data quality issues are flagged and reported | ||

| Null Validation | Validate handling of null values | 1. Extract data from the source system | Extracted data handles null values appropriately |

| 2. Verify the treatment of null values | Null values are handled as per defined specifications | ||

| Duplicate Check | Identify and handle duplicate records | 1. Extract data from the source system | Duplicate records are identified and addressed |

| 2. Perform duplicate checks | Duplicate records are flagged and handled accordingly | ||

| Date Validation | Validate date formats and date-related rules | 1. Extract data containing dates | Dates are validated as per the defined formats and rules |

| 2. Verify the accuracy of date-related data | Date-related issues or inconsistencies are identified | ||

| Data Cleanness | Validate the cleanliness and integrity of data | 1. Extract data from the source system | Extracted data is clean and free from inconsistencies |

| 2. Perform data cleanliness checks | Data cleanliness issues are identified and resolved |

Types of ETL Bugs

1. GUI bugs: Bugs related to the GUI of the application, that is, the font, color, alignment, etc.

2. Boundary Value Analysis Bug: Bugs at the minimum and maximum values.

3. Equivalence Class Partitioning Bug: Bugs at validation.

4. Input/Output Bug: Invalid output or valid input not accepted bugs.

5. Calculation Bug: Bugs at mathematical or pattern operations.

6. Load Bug: Functionality breaking down on load on the server.

7. Version Control Bug: Version information not maintained bug.

8. Hardware Bug: Hardware devices not working/responding bug.

ETL Testing in Data Transformation

- Validating data at the source and target end.

- Quality and integrity of data being maintained.

- Conversion of data in the rational database to JSON format.

Performance Testing in ETL

Performance Testing in ETL ensures the database can handle multiple users operating on it simultaneously. One of the most common tools for the same is Informatica. Here, the session is managed by multiple users, and security is the primary concern. The system should neither slow nor crash, and performance bottlenecks must be avoided.

Responsibilities of an ETL Tester

- Applying business transformation logic.

- Create and execute ETL test cases.

- Data transfer and creation of test flat file.

- Verification using SQL queries.

Differences Between the ETL and the Database Testing

| ETL Testing | Database Testing |

|---|---|

| ETL testing is the journey of data from the database to the target source. | Database testing's only focus is on the accuracy of the data and that the schema structure is maintained. |

| Validates the data from the database to the controller is mapped correctly. | Validate the data is correct in the database |

| ETL tester must know about API and transformation of data. | Database tester should be a master of queries and architecture of the database. |

| Database testing is a part of ETL testing. | Database testing ensures best practices of the database are used. |

ETL performance Testing

The following steps are taken to assess the performance of ETL testing:

- Identify the data load that has been transformed in production and generate new data with the same load or transfer it from the production environment to a local server.

- Temporarily disable the ETL process until the required code is generated and retrieve the necessary data from the database table.

- Record the most recent ETL run and re-enable the ETL process. It will undergo sufficient stress to transform the entire load that has been created and execute it.

- Data is being counted upon successful completion of the ETL process.

Data Accuracy in ETL Testing

The following steps should be undertaken to perform data accuracy testing:

1. Value Comparison: This step involves comparing the data in the source and target systems with minimal or no transformation. ETL testing can be conducted using various ETL tools, such as utilizing the Source Qualifier Transformation in Informatica.

2. Expression Transformation: Data accuracy testing can also include performing expression transformations. SQL statements employing a set of operators can be used to validate the accuracy of data in both the source and target systems.

3. Critical Data Column Validation: To ensure accuracy, it is important to verify the columns containing critical data. This can be achieved by comparing the distinct values present in the source and target systems.

ETL testing in Data Transformation

To conduct ETL testing for data transformation, the following key steps should be taken:

- Begin by creating a scenario that outlines the input data and the expected results. It is crucial to validate the ETL testing approach with the business customer. ETL testing serves as an effective means of gathering requirements during the design phase and can be incorporated as part of the overall testing strategy.

- Proceed to generate test data based on the established scenario. The ETL developer can automate the process of populating datasets, using a scenario spreadsheet that allows for flexibility and adaptability as the situation evolves.

- Employ data profiling techniques to compare and evaluate the range and distribution of values in each field between the source and target datasets.

- Verify the accurate processing of ETL-generated fields, such as surrogate keys, to ensure their correct implementation.

- Validate that the data types within the data warehouse align with the specifications outlined in the data model or design.

- Construct data scenarios between tables to test referential integrity, ensuring that the relationships between related data entities are maintained correctly.

- Confirm the parent-to-child relationships within the data, ensuring the consistency and accuracy of these associations.

- Lastly, perform the lookup transformation in which the lookup query should be straightforward, without requiring additional data retrieval, and should ideally return only one value based on the source table.

Best Practices for ETL Testing

- Data loss and truncation of data have to be eliminated.

- A robust transaction channel has to be maintained from client to database.

- Unit testing with exception handling and logs needs to be done.

- Data has to be loaded into the warehouse and security needs to be maintained.

Conclusion

- ETL testing is a type of software testing where the tester focuses on the data journey from one source to another.

- Database testing is a sub-part of ETL testing

- Data must be extracted from the source, transformed into different formats, and loaded into the warehouse.

- Data loss must be zero during the entire cycle.